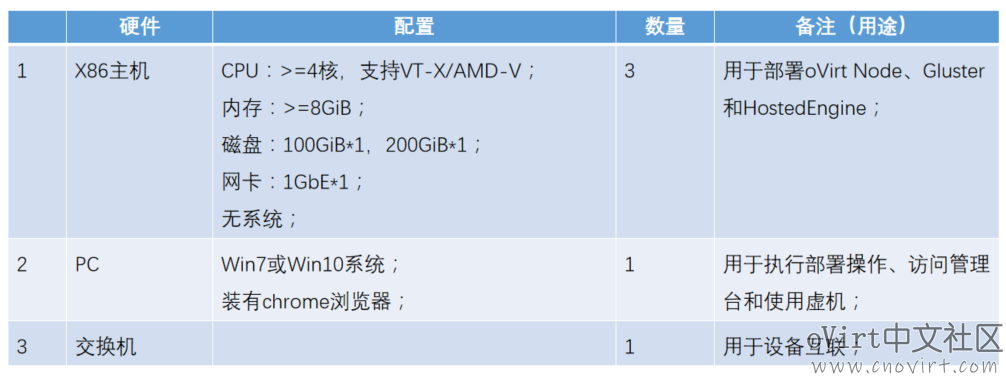

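Hardware preparation

- For ease of demonstration and screenshots, this walkthrough uses virtual machines running in oVirt.

- The host specifications listed here are the minimum requirements.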

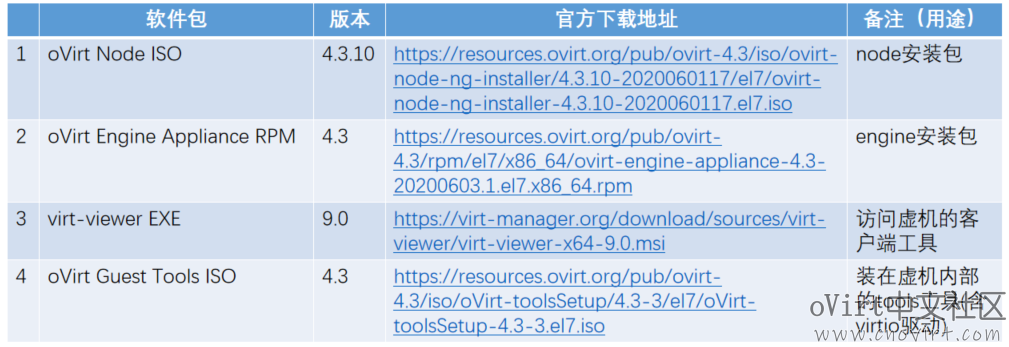

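Software preparation

Baidu Netdisk download address: (this site -> installation package downloads)

Link: https://pan.baidu.com/s/10HarsPsucmovfF-jDH4aEw

Extraction code: pu1s

Alternate download address:

Network planning

- Plan and configure these settings according to your actual environment.

- If this environment is connected to the public internet, make sure the planned domain names do not resolve externally (they must not answer ping before deployment).

- Note that these are the minimum requirements for a lab environment. In production, the storage network and the VM service network should be separate networks, the storage network should be at least 10GbE, and multiple NICs can be bonded.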

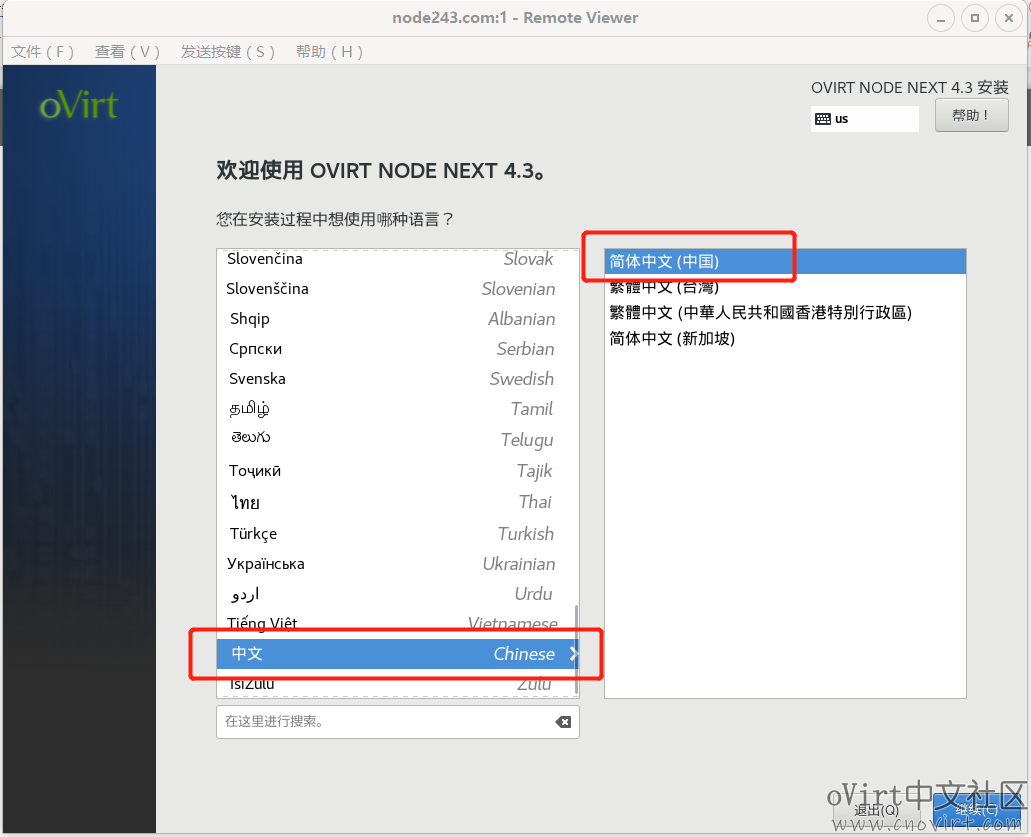

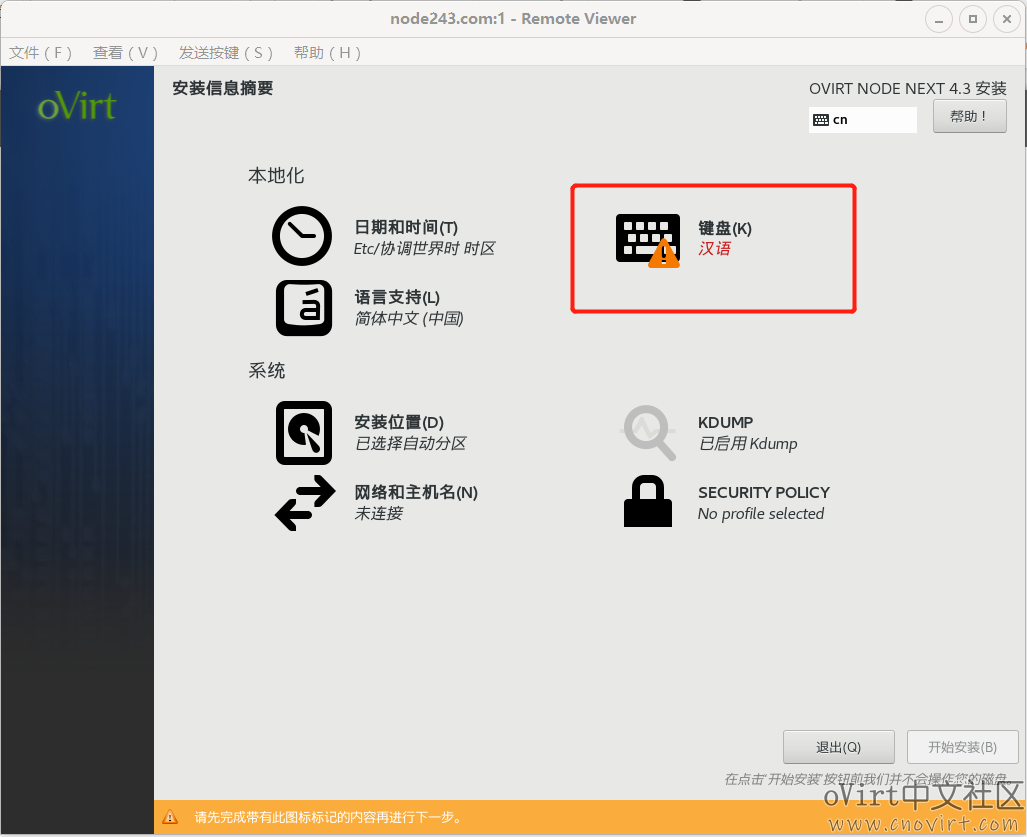

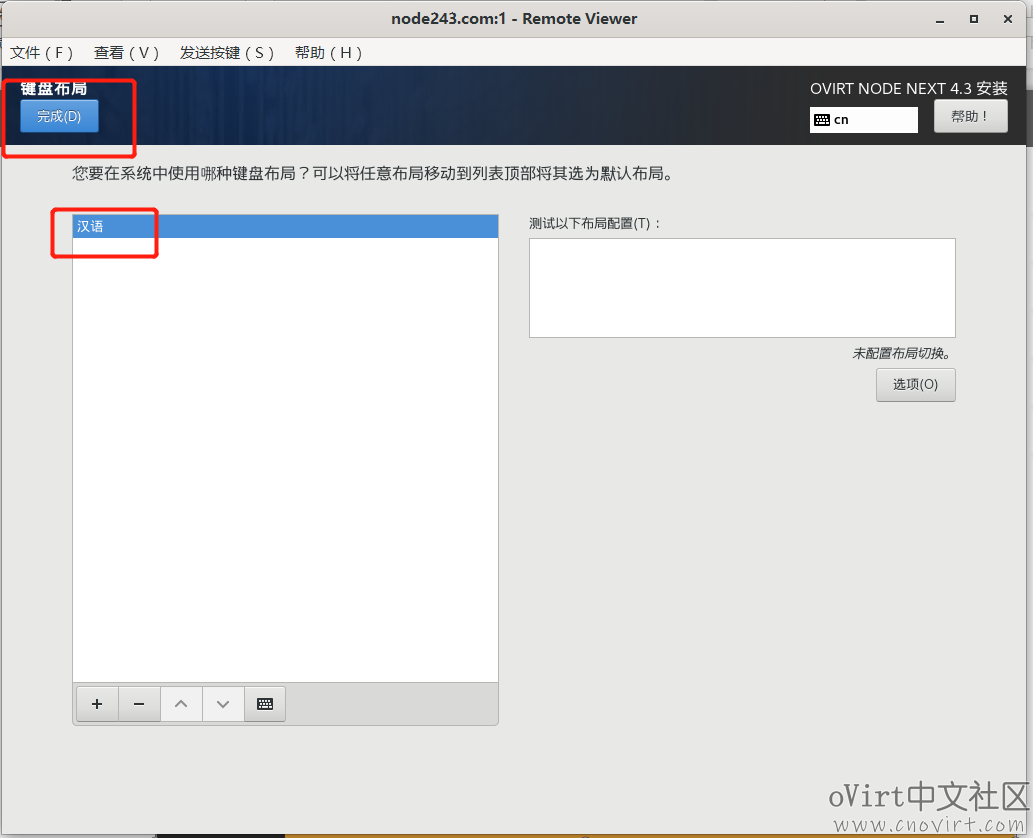

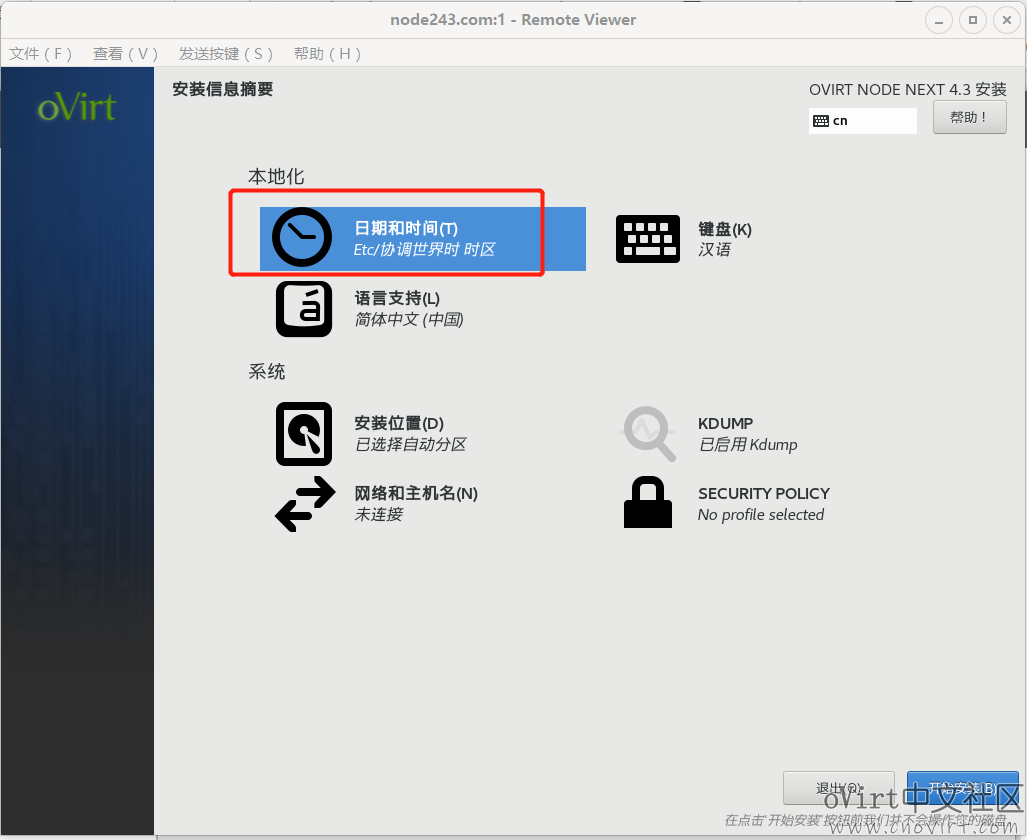

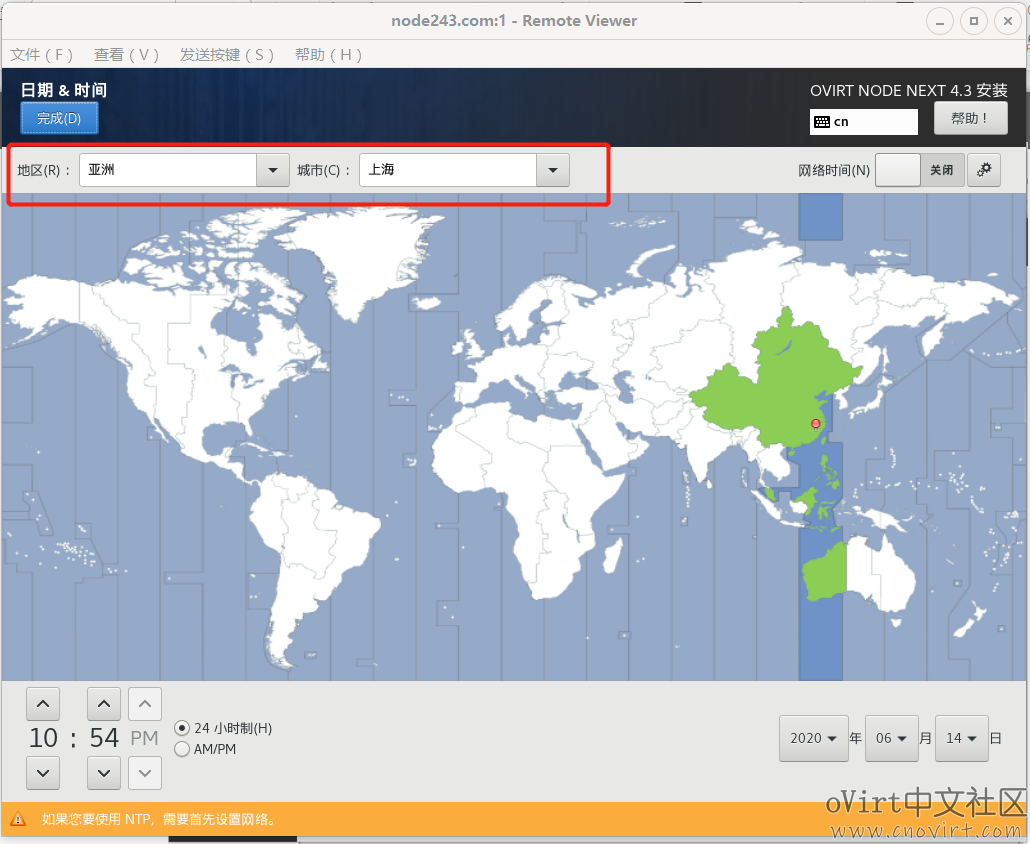

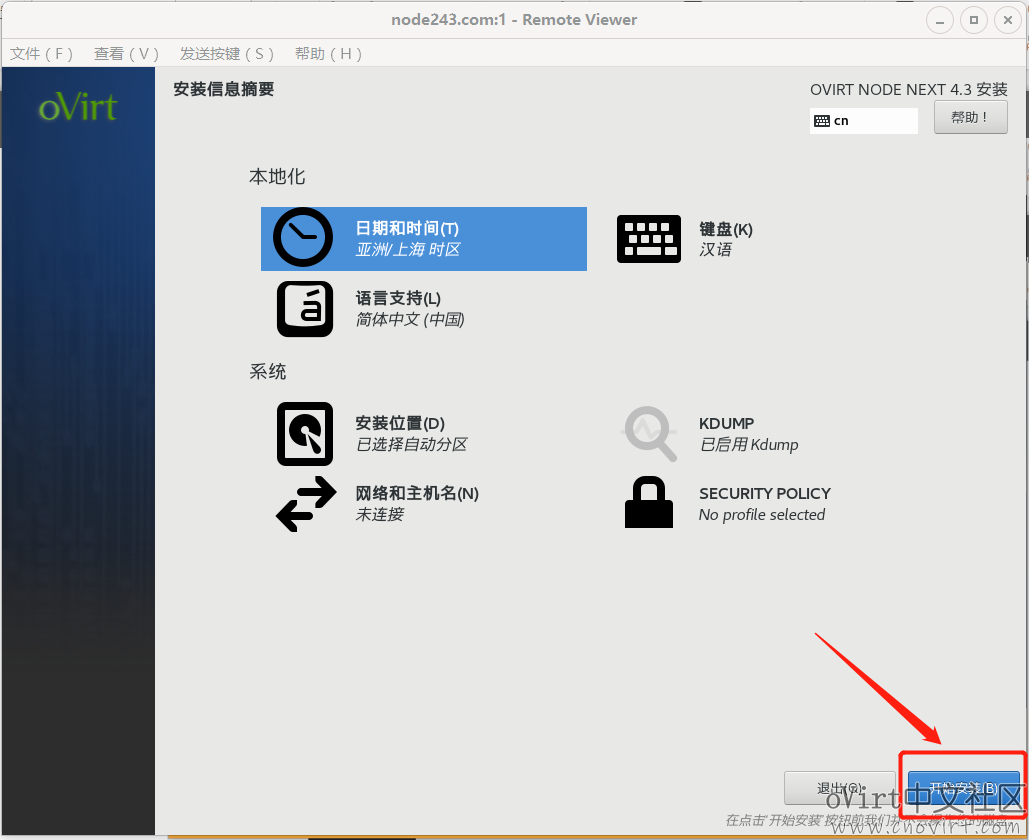

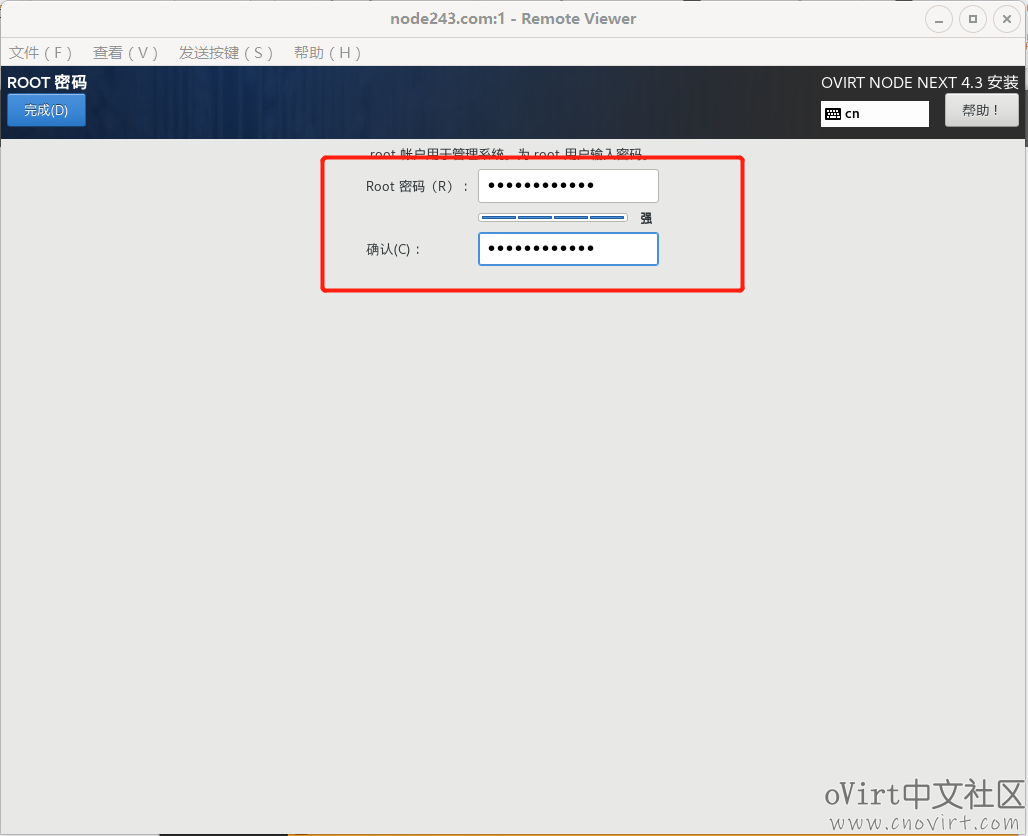

Deployment process: installing the Node

Burn the oVirt Node ISO to a disc and boot each of the 3 hosts from the CD to install:

Select the 100 GiB disk as the system disk:

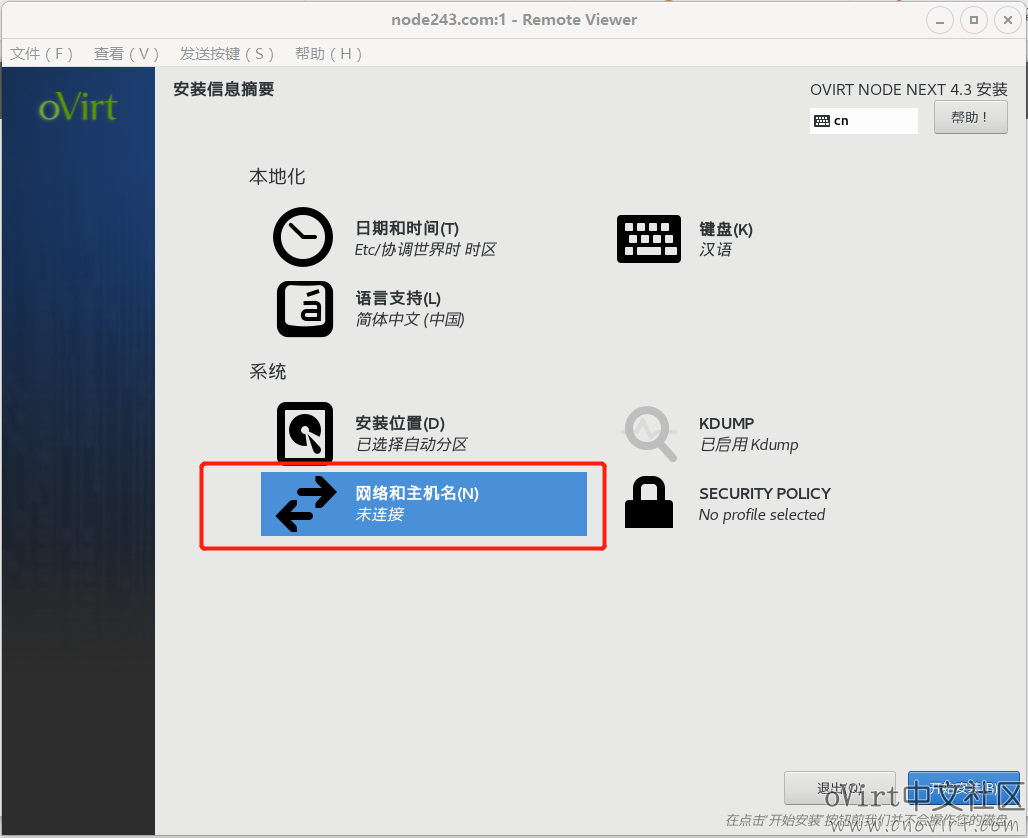

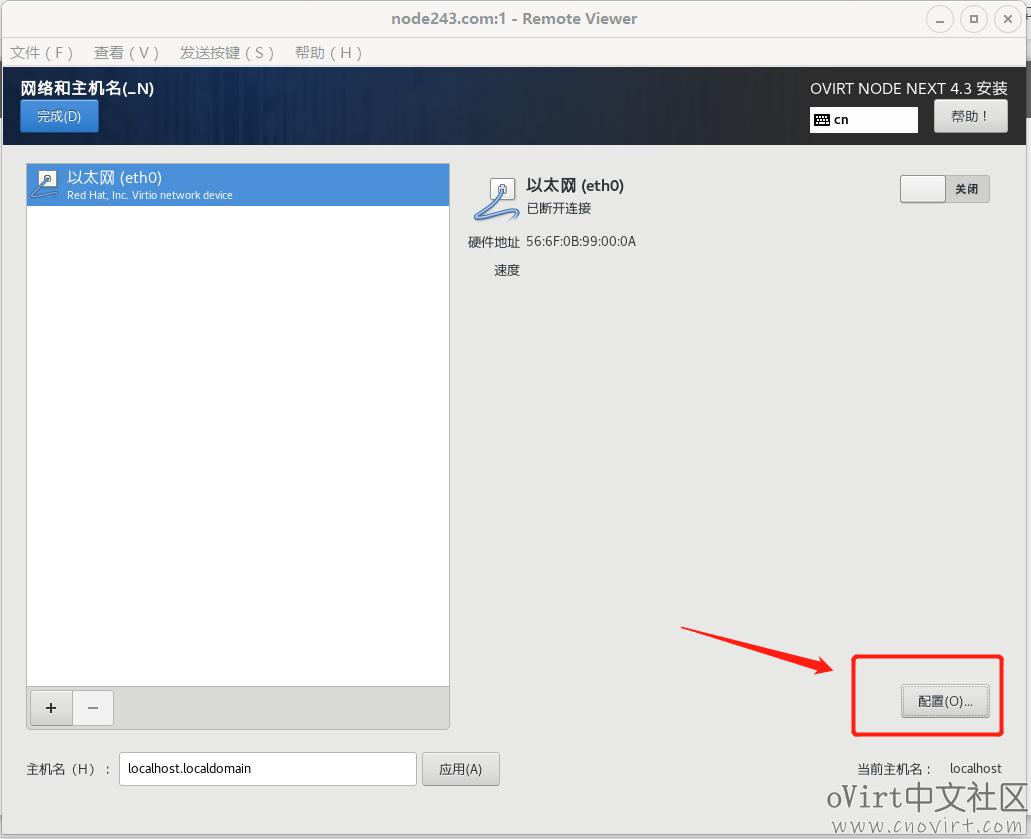

配置网络:

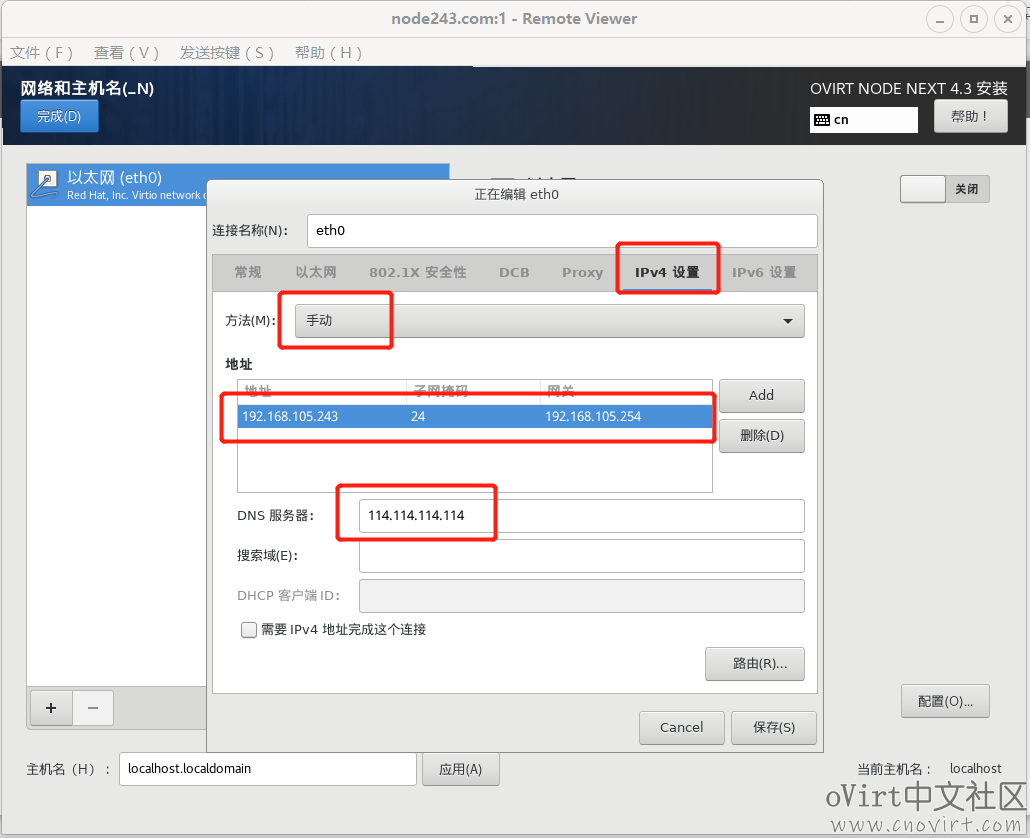

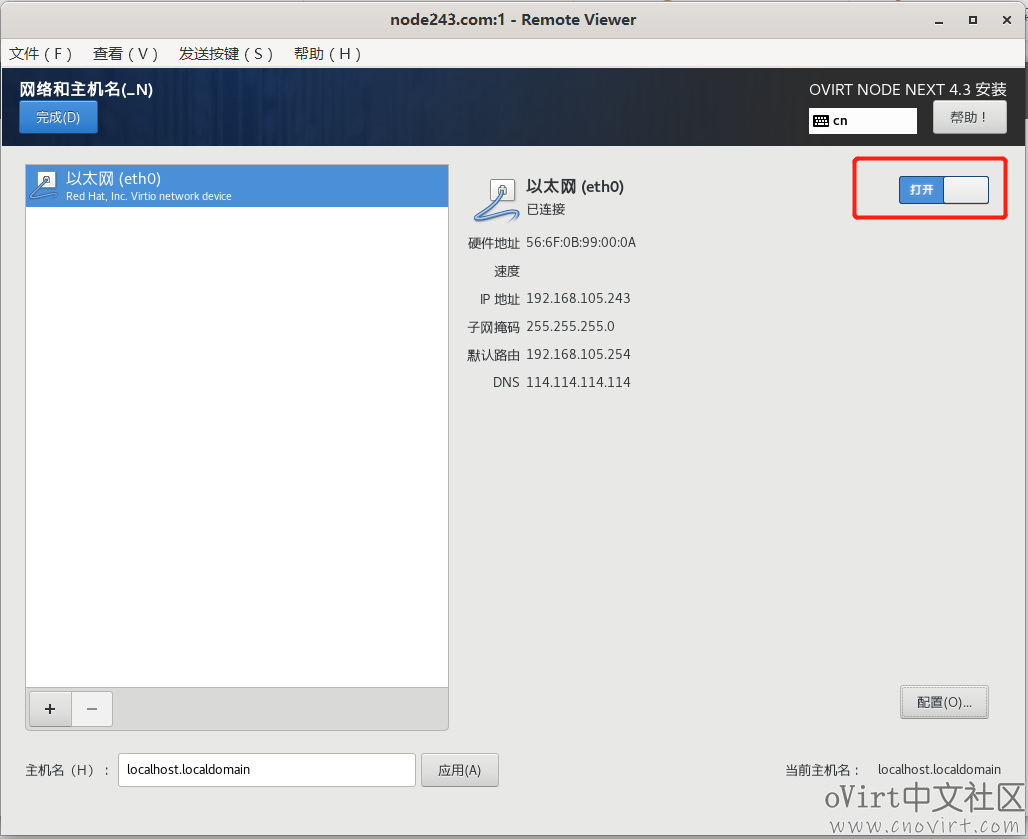

这里配置静态ip地址:

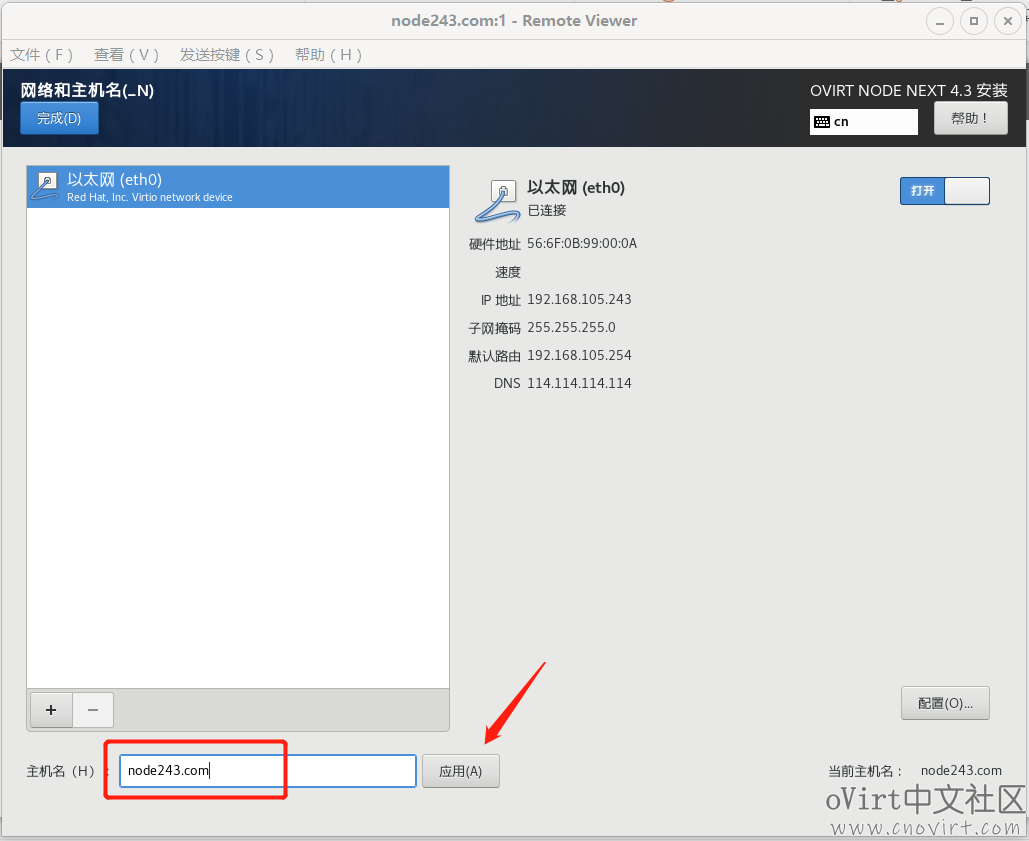

配置域名:

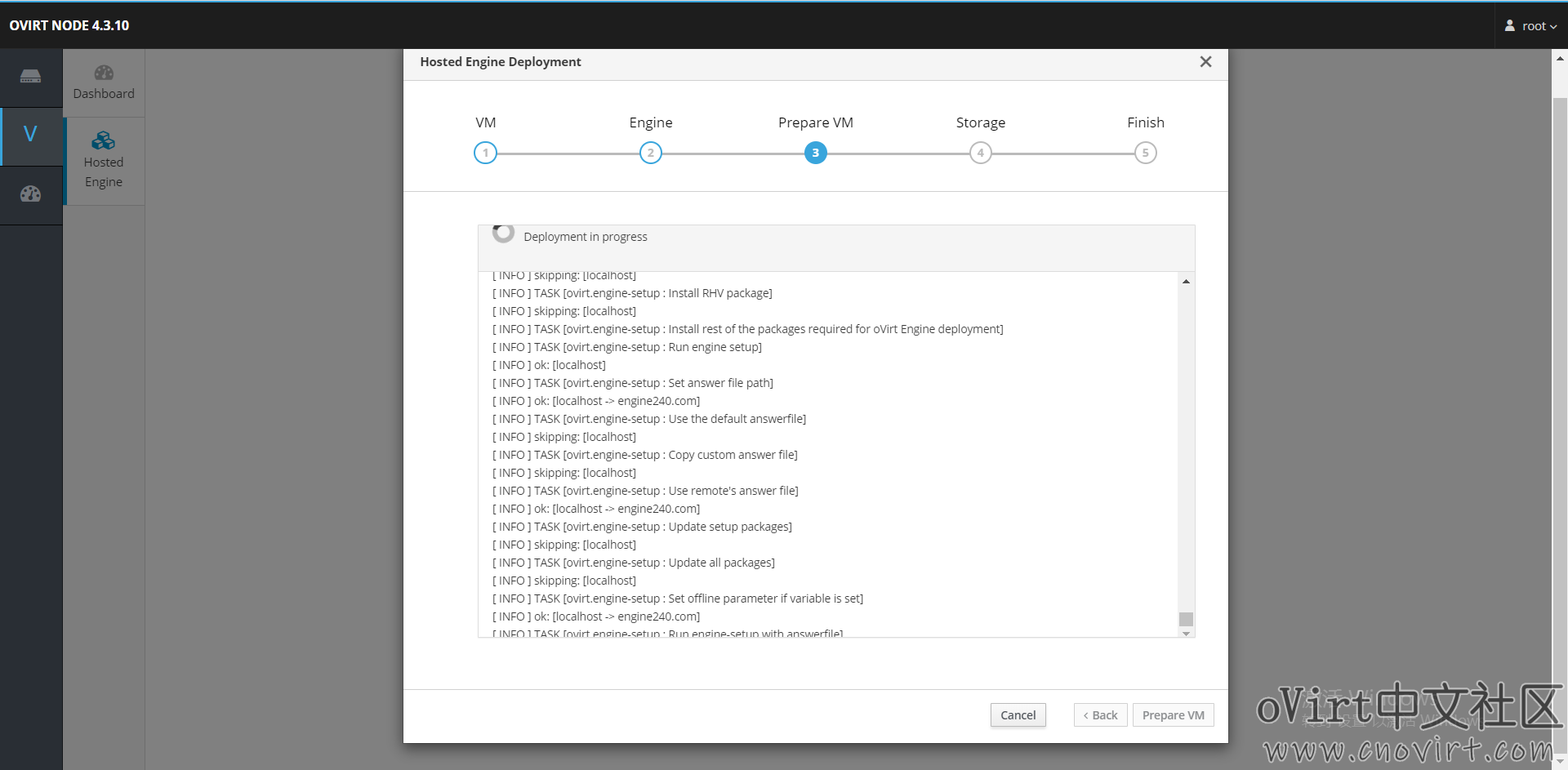

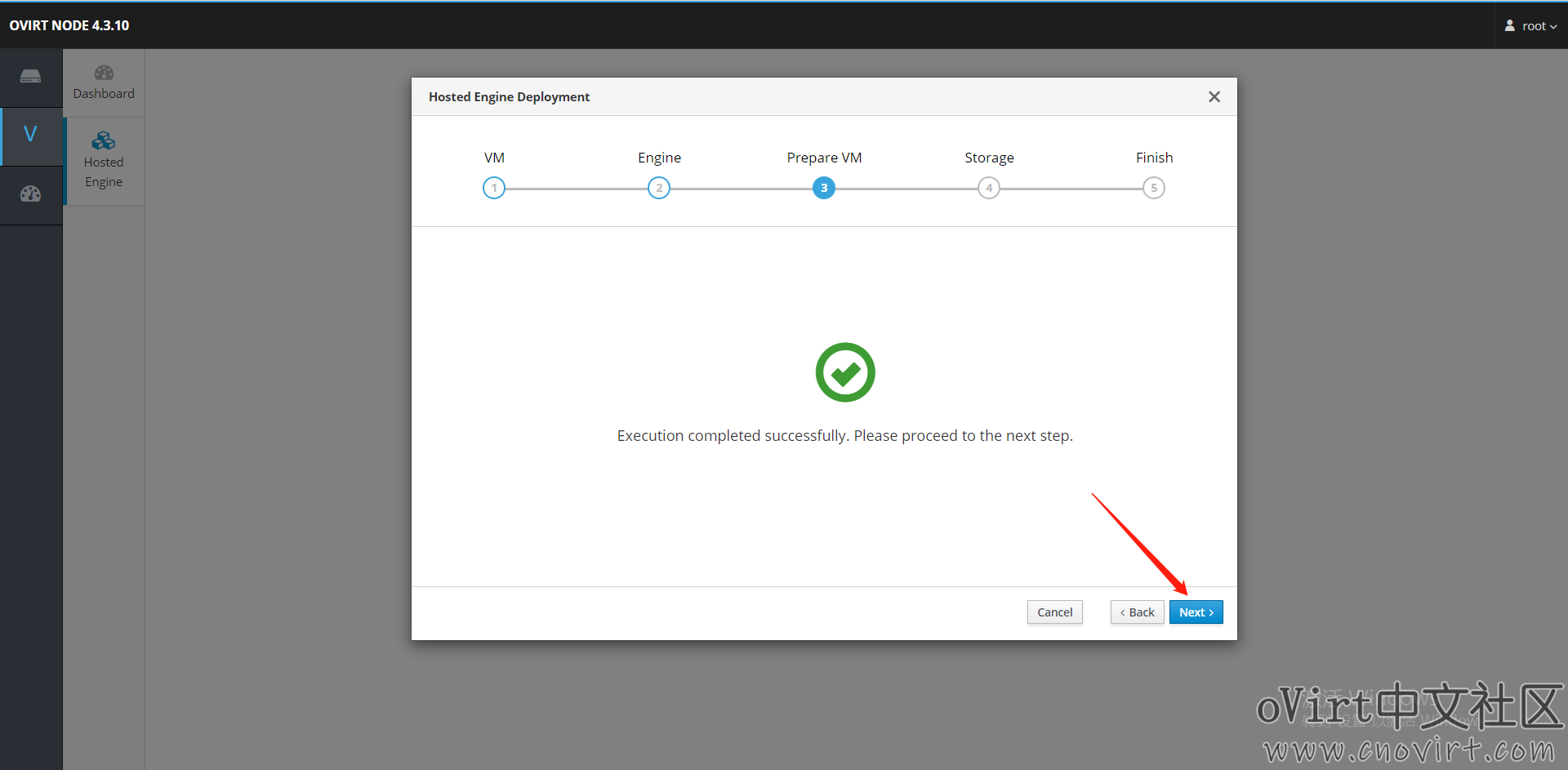

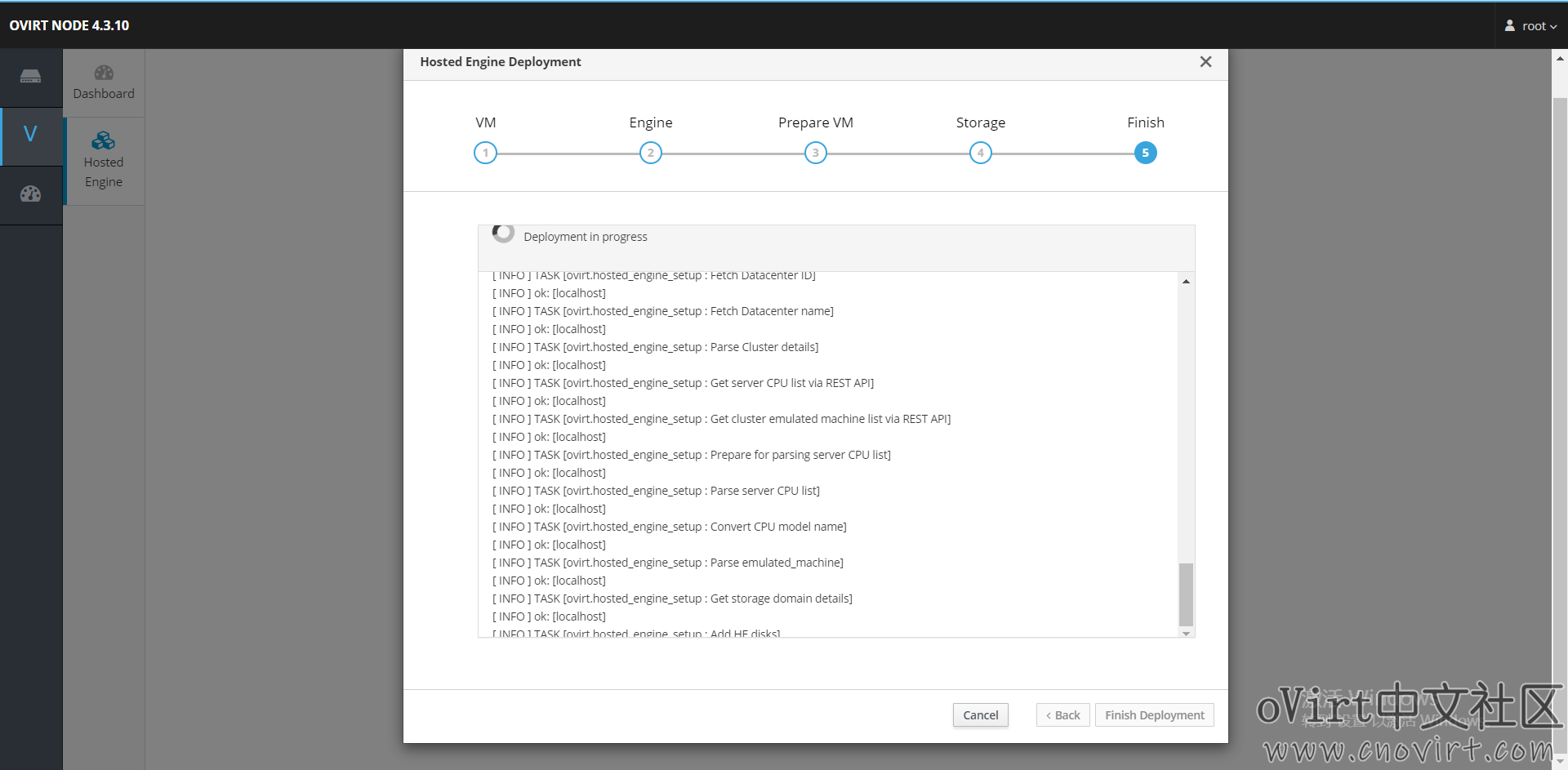

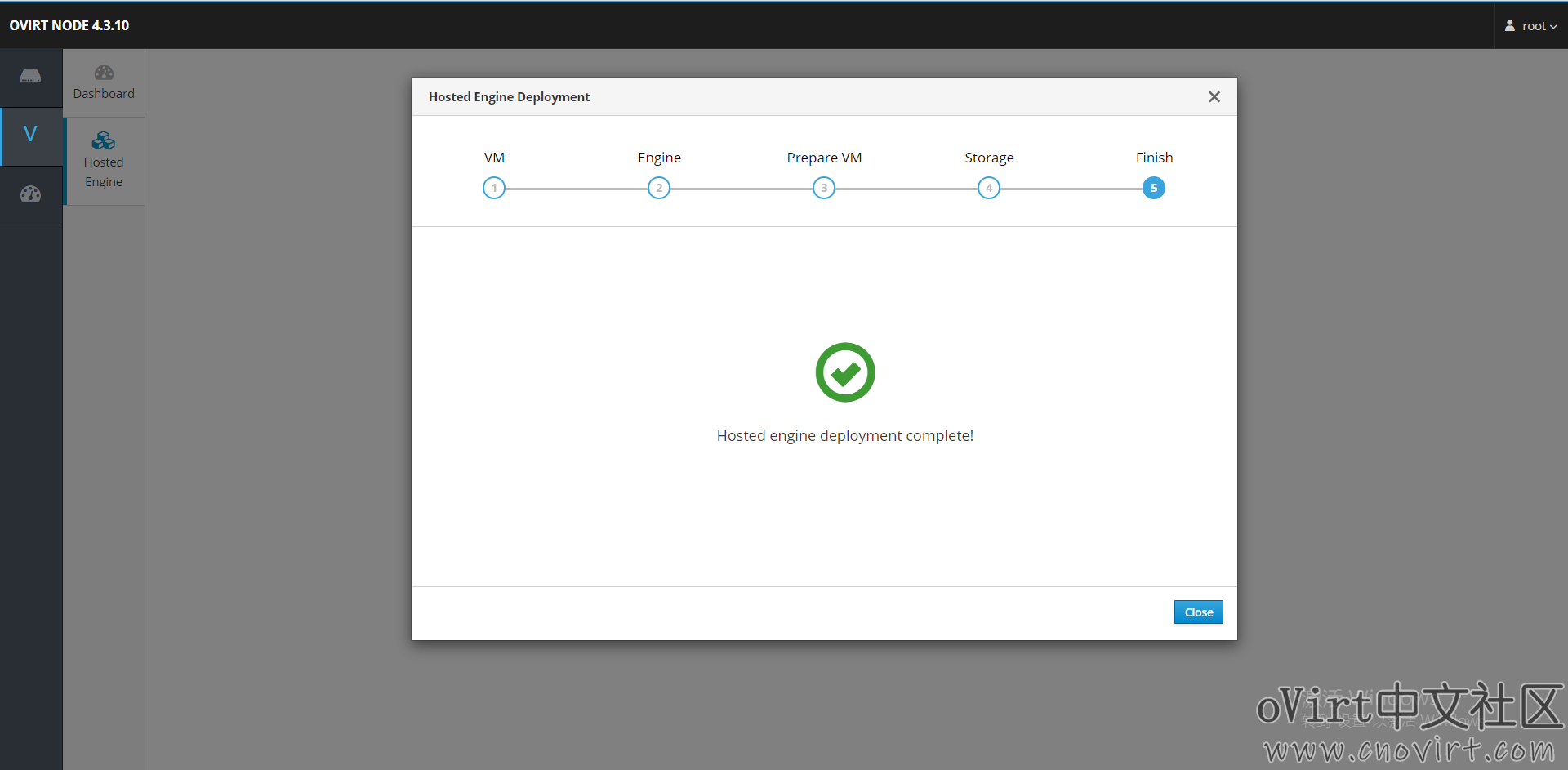

Deployment process: deploying HostedEngine

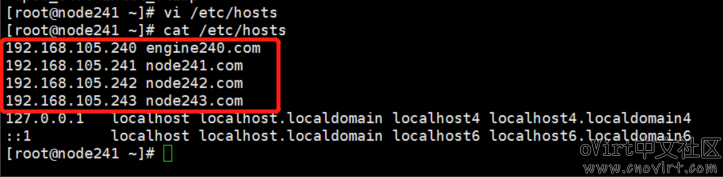

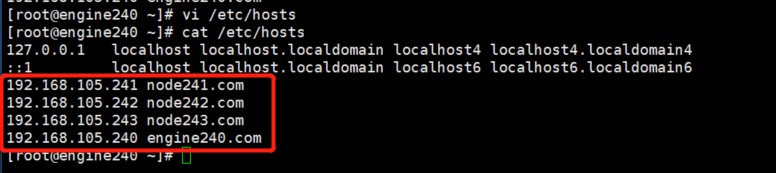

On each of the 3 nodes, add name resolution entries for the 3 nodes and the engine to /etc/hosts:

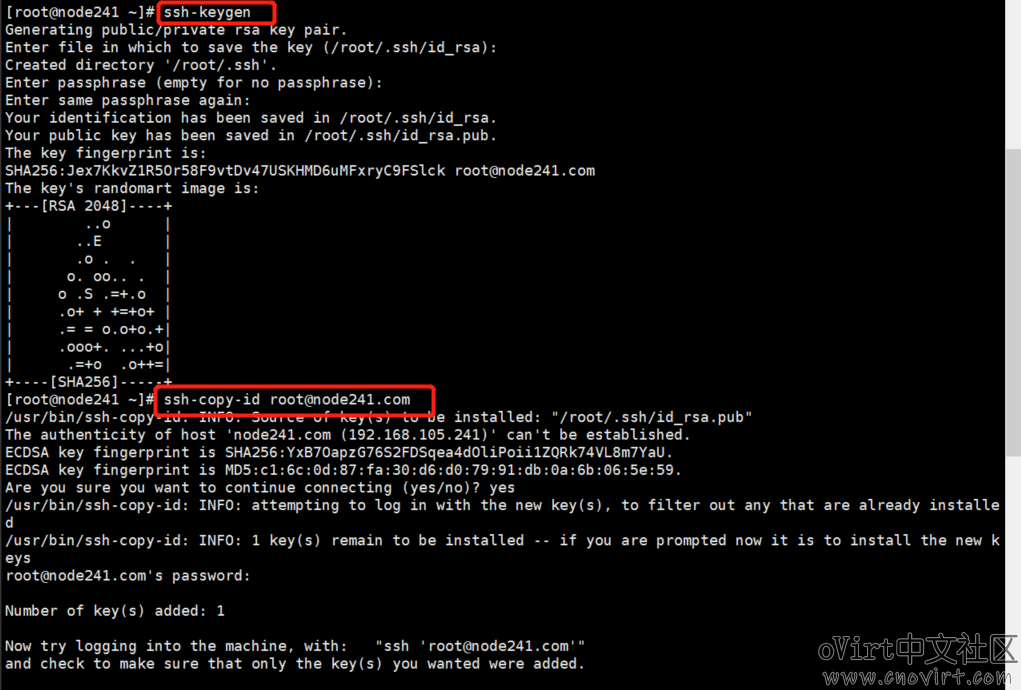

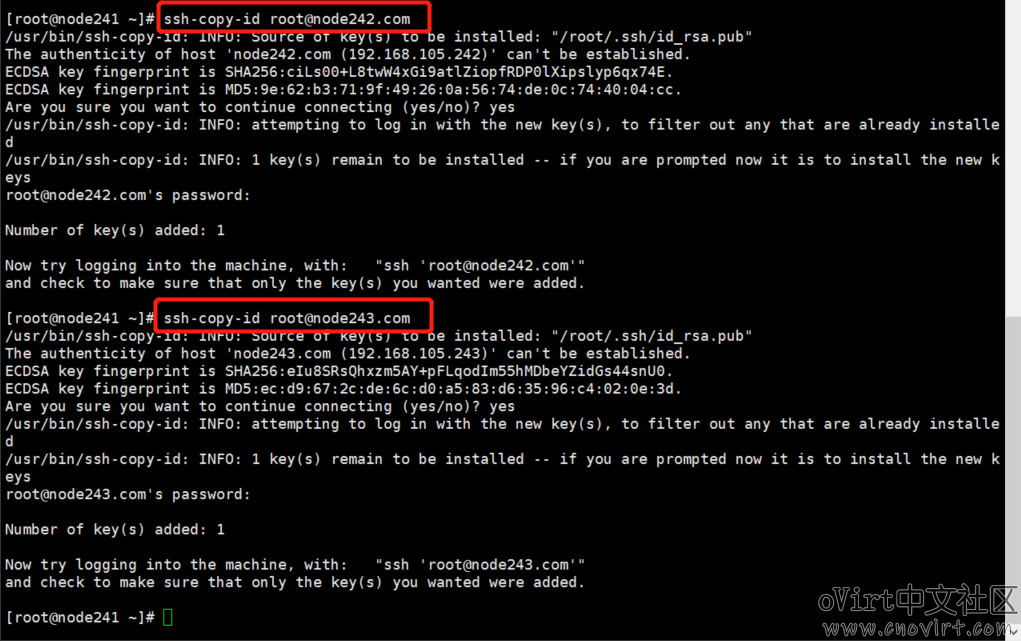
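A minimal sketch of the /etc/hosts step, under stated assumptions: only the node address 192.168.105.241 and the engine address 192.168.105.240 appear in this article, so the .242 and .243 addresses are assumed by analogy. The helper names `hosts_entries` and `append_missing` are illustrative, not part of oVirt.

```shell
#!/bin/sh
# Print the /etc/hosts lines for the three nodes and the engine.
# ASSUMPTION: 192.168.105.242 and .243 are guessed from the naming pattern;
# replace every address and name with the values from your own plan.
hosts_entries() {
    cat <<'EOF'
192.168.105.241 node241.com
192.168.105.242 node242.com
192.168.105.243 node243.com
192.168.105.240 engine240.com
EOF
}

# Append only the lines that are not already present in the target file,
# so the helper can be run more than once without creating duplicates.
append_missing() {
    target="$1"
    hosts_entries | while IFS= read -r line; do
        grep -qxF "$line" "$target" || printf '%s\n' "$line" >> "$target"
    done
}

# Usage on each node (as root):
#   append_missing /etc/hosts
```

Running the helper a second time leaves the file unchanged, which makes it safe to repeat on all three nodes.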

Configure passwordless SSH login. Note that this must be done on the machine from which you will run the HostedEngine deployment in Cockpit:

# ssh-keygen

# ssh-copy-id root@node241.com

# ssh-copy-id root@node242.com

# ssh-copy-id root@node243.com

The process looks like this:

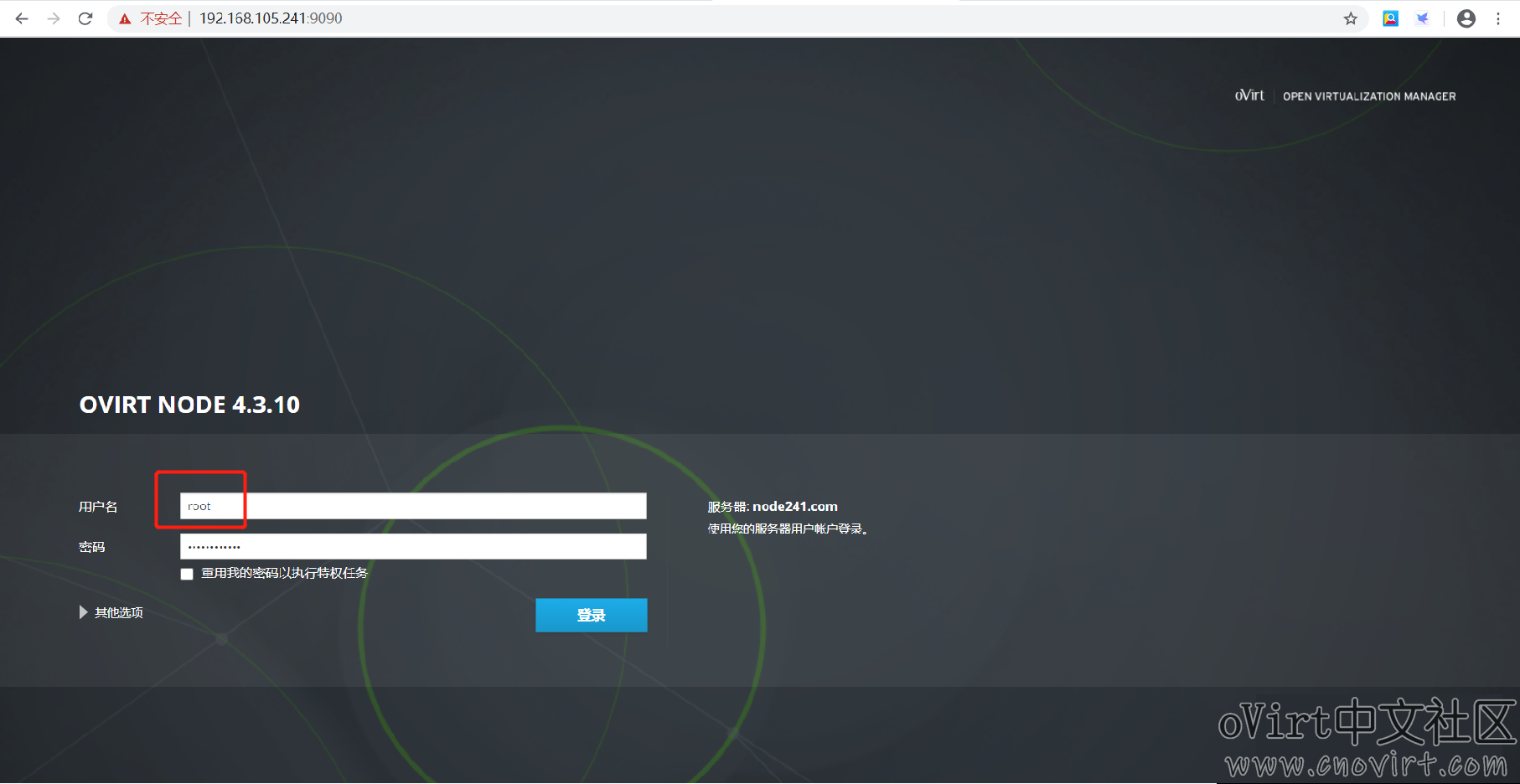

Open the Node's Cockpit portal at https://192.168.105.241:9090 (note the port, 9090).

Log in as root with the password set during the Node installation.

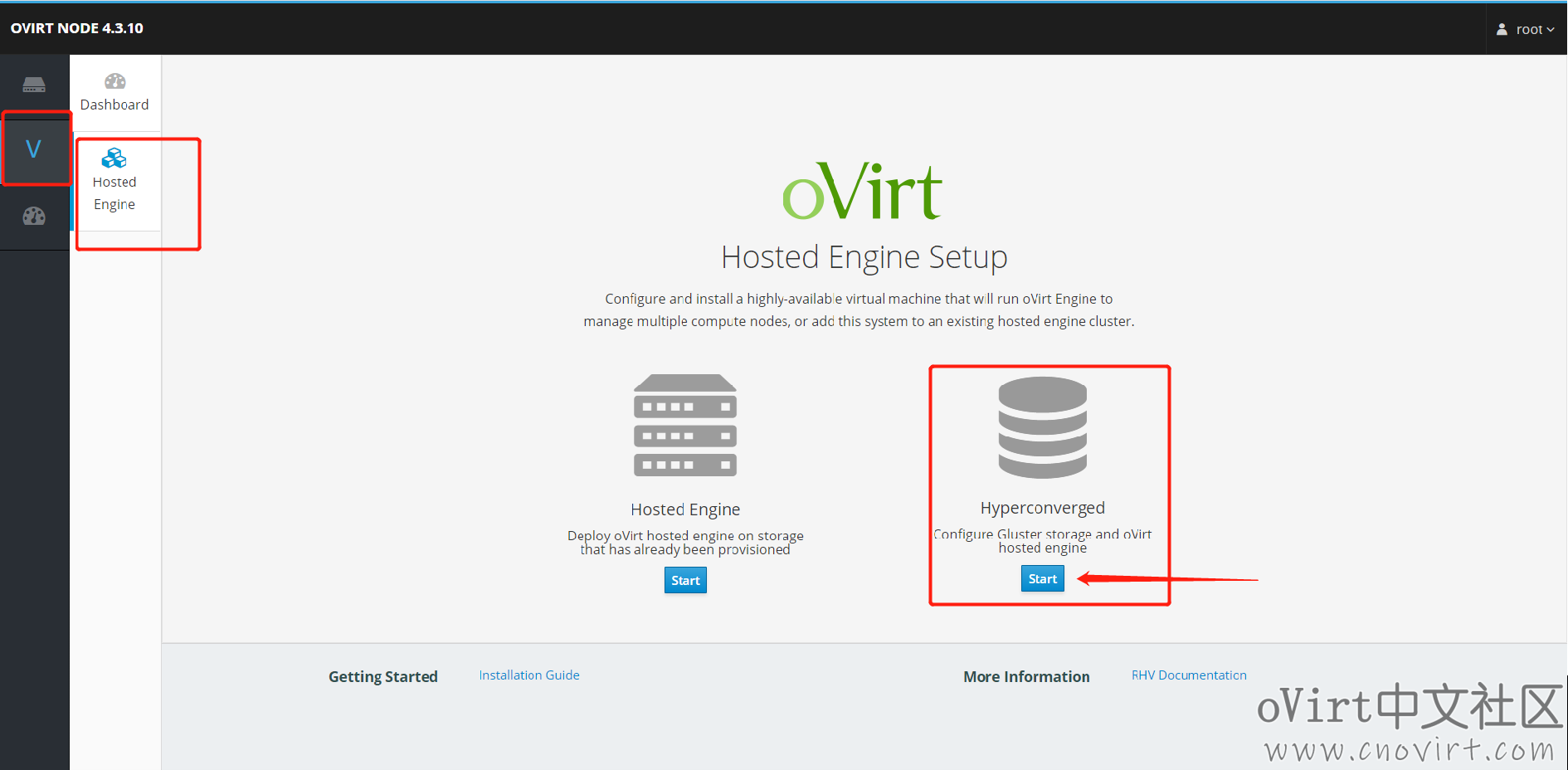

Go to the HostedEngine page and choose Hyperconverged:

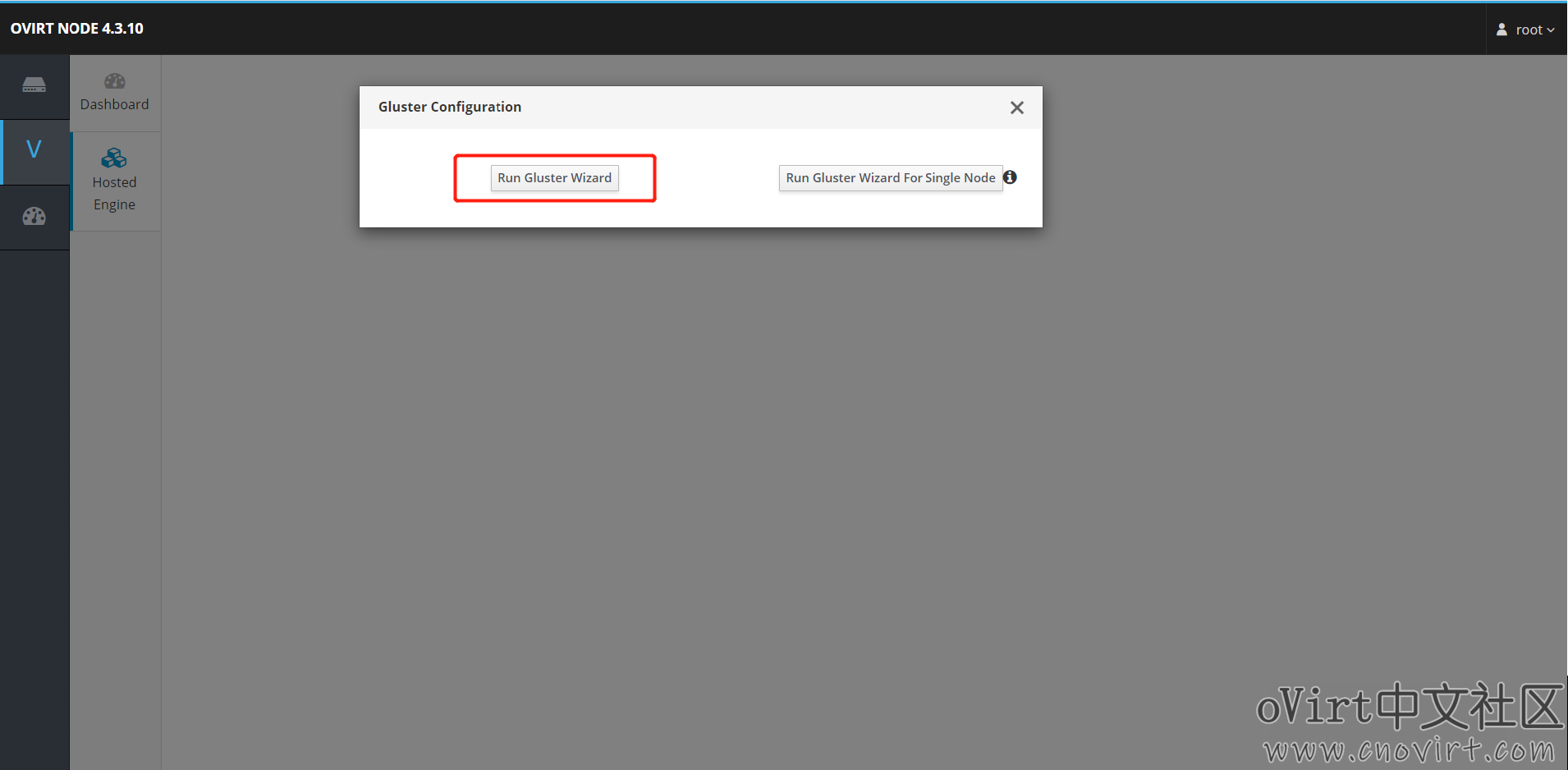

Choose the first wizard:

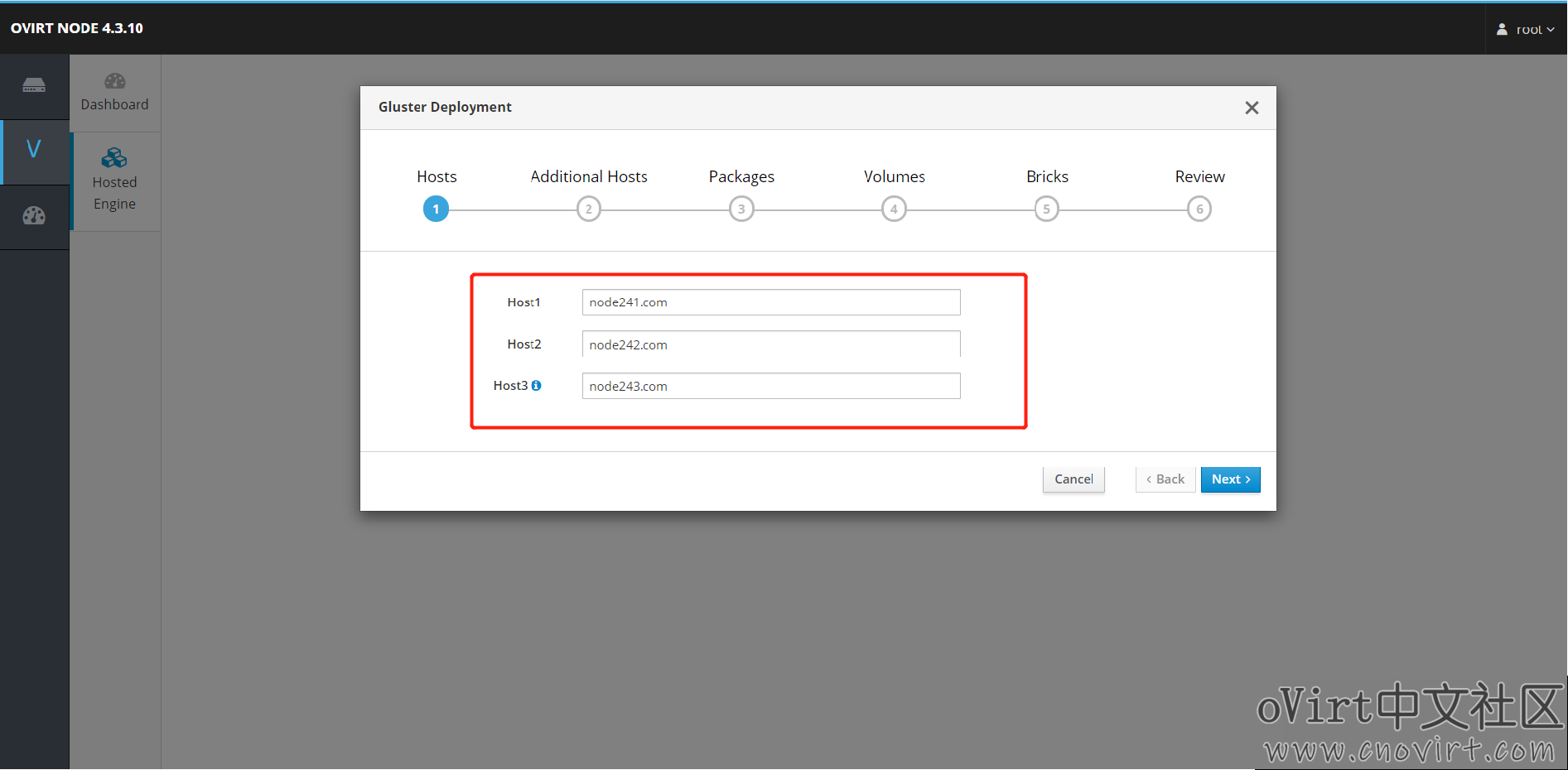

Enter the domain names of the 3 hosts here:

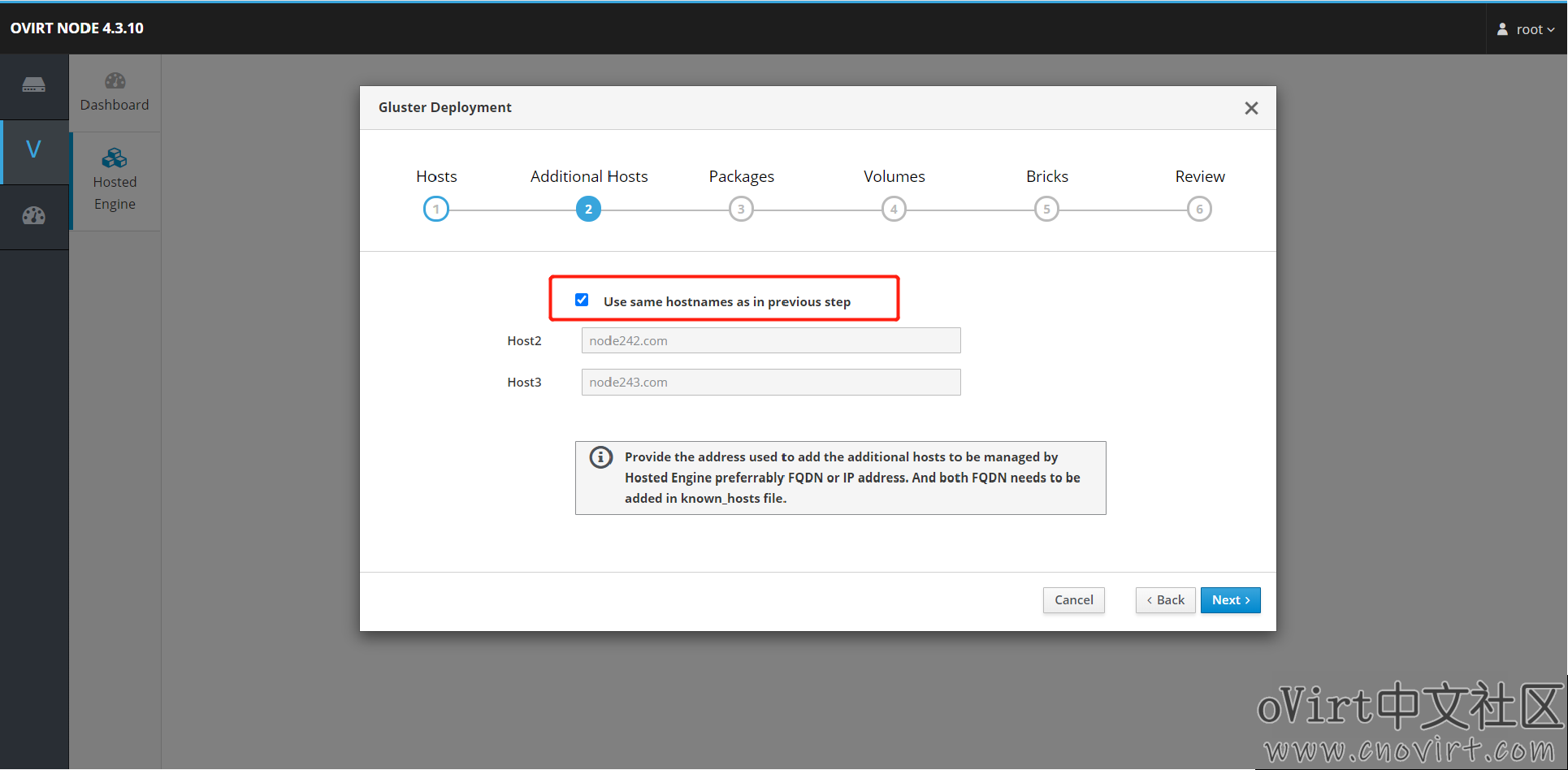

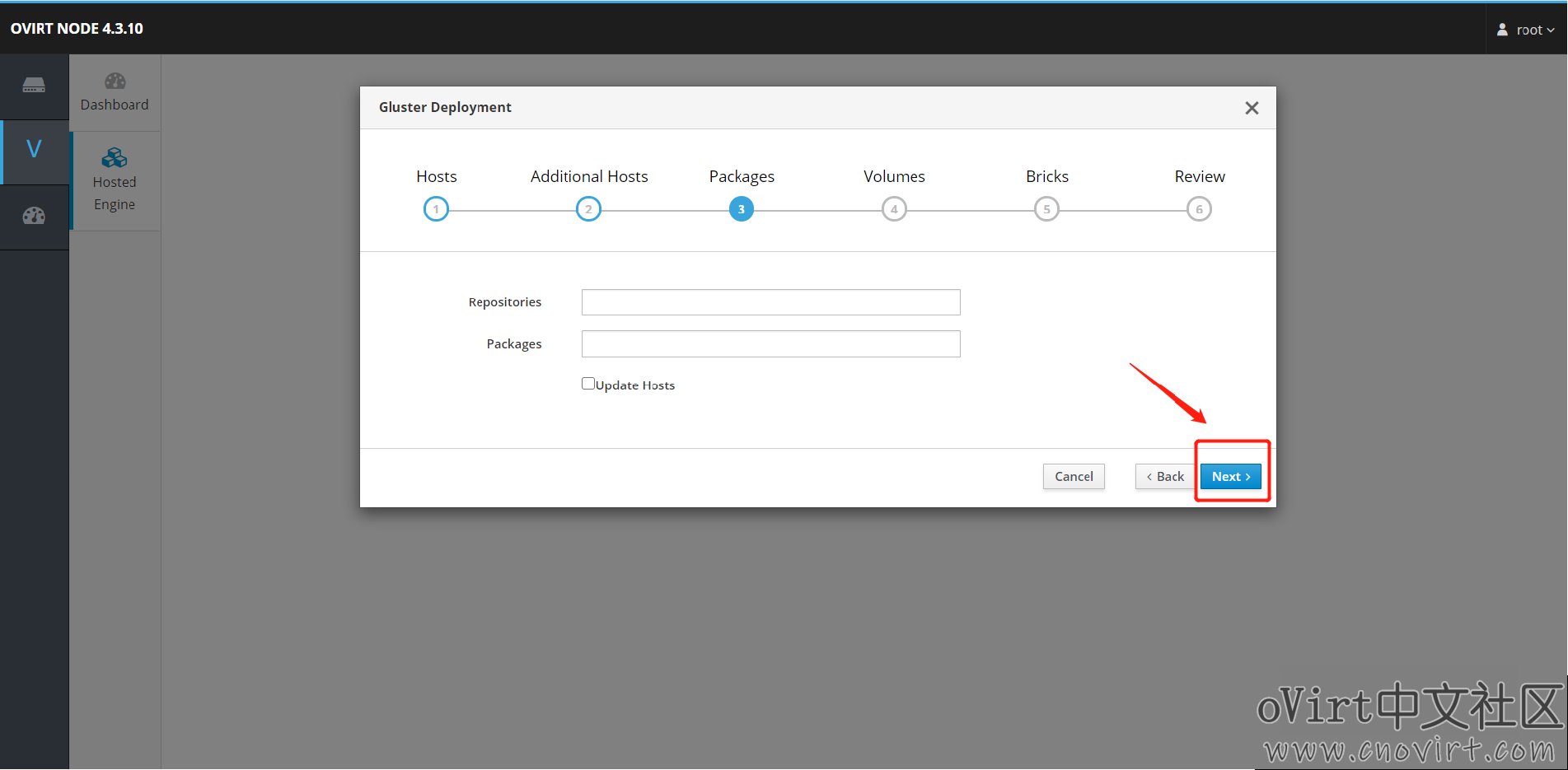

Leave this step empty and go straight to the next one:

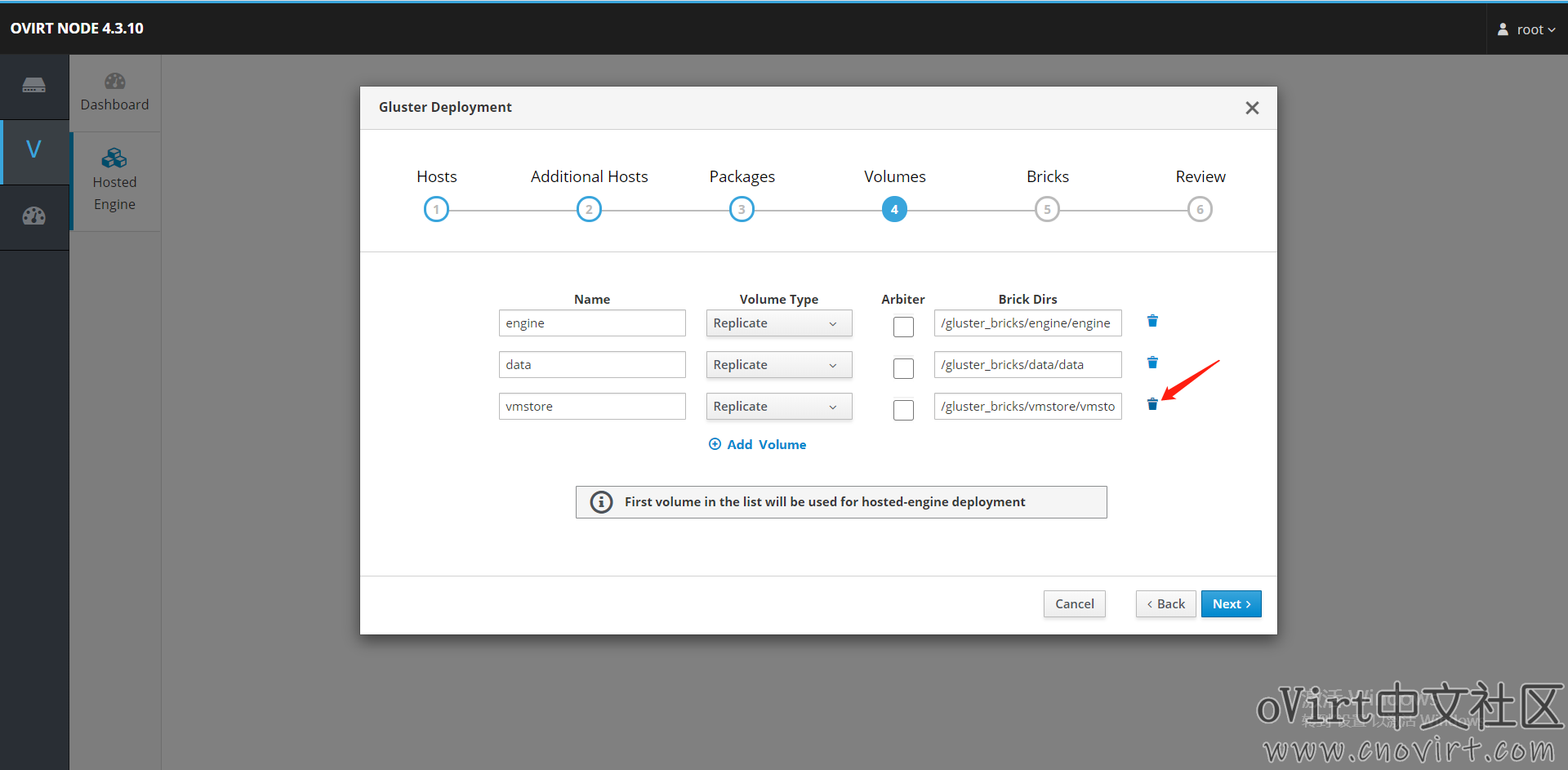

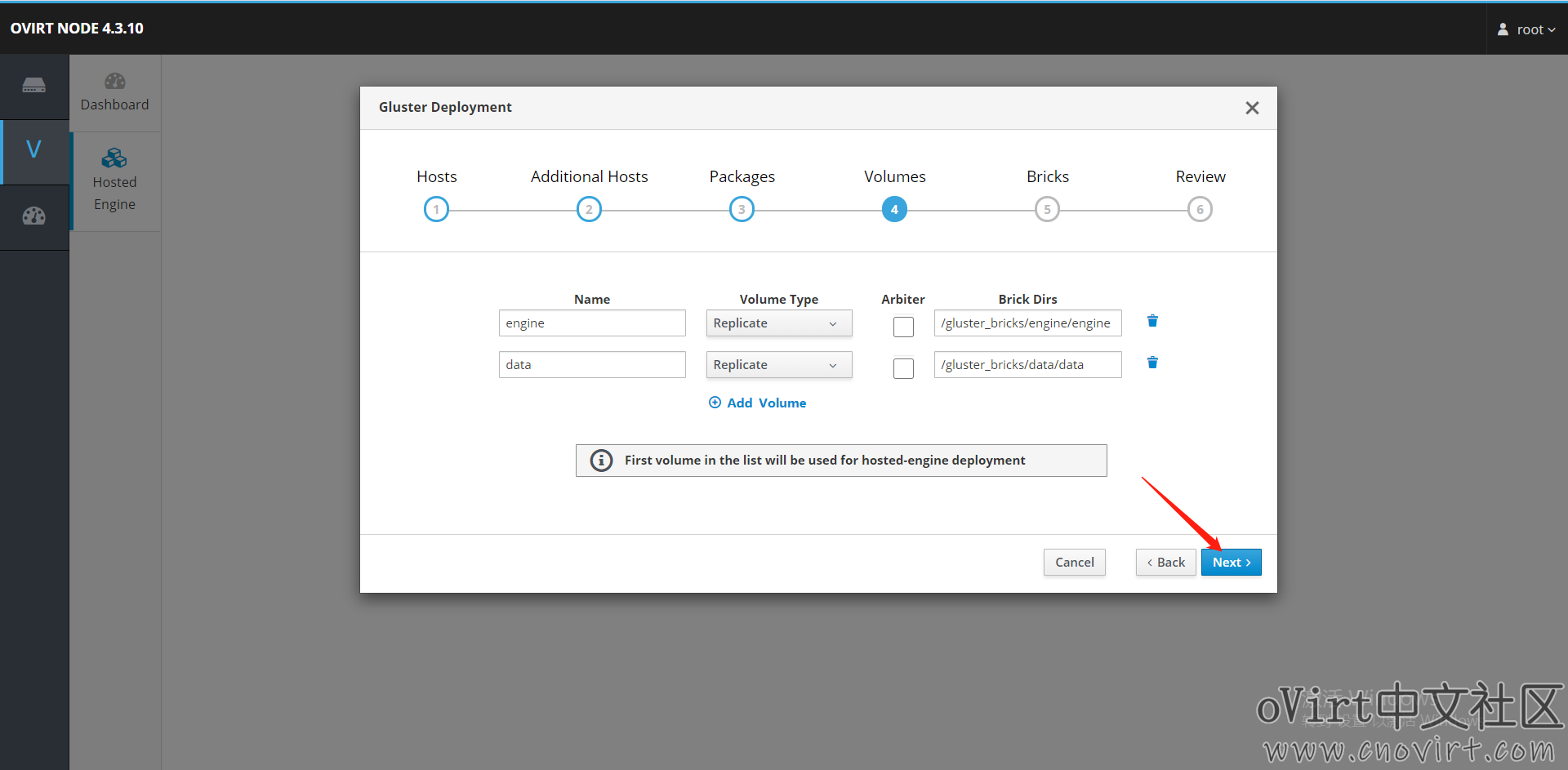

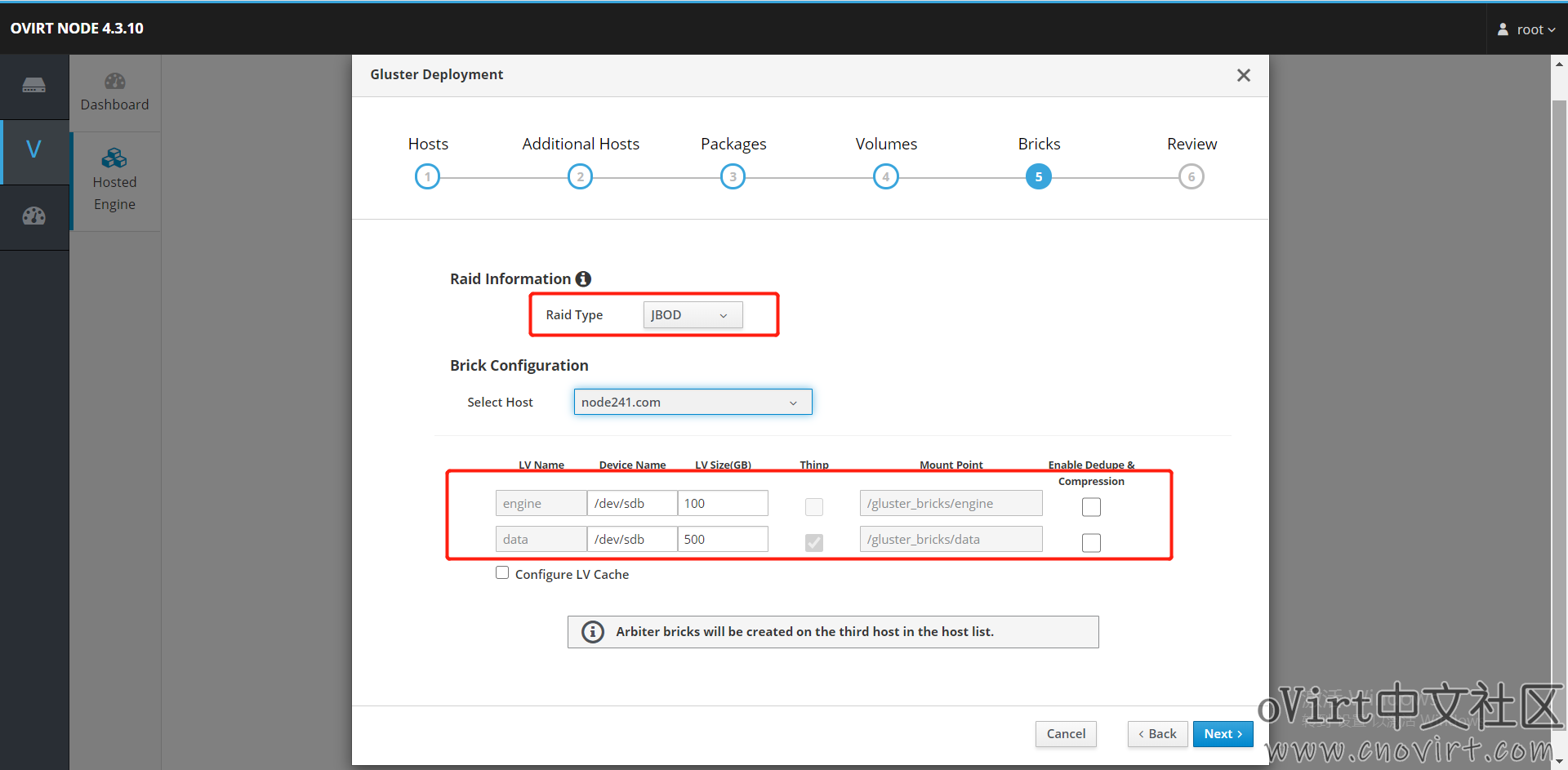

Delete the data volume here (by default, vmstore holds VM system disks, data holds VM data disks, and engine holds the HostedEngine disk); keeping engine and vmstore is enough:

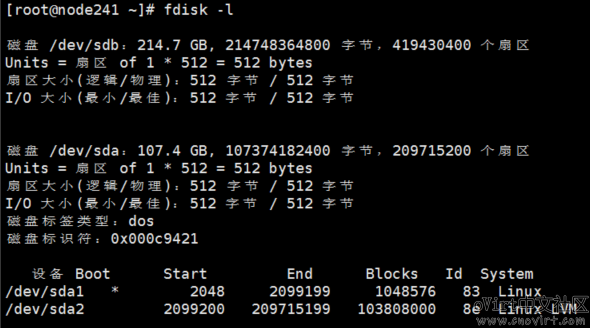

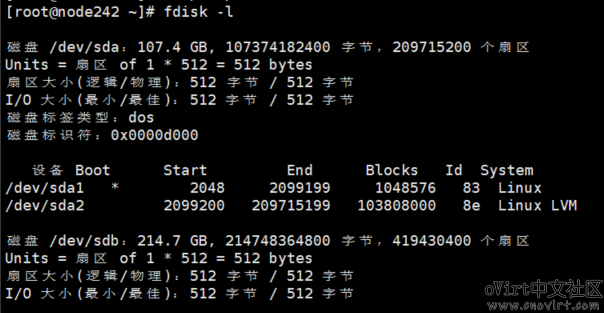

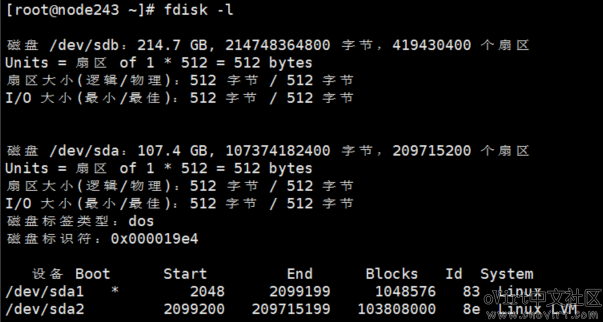

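Confirm that sda and sdb are consistent across all node hosts: sda holds the installed system and sdb is an empty disk.

Confirm that the device path and size shown here are correct: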

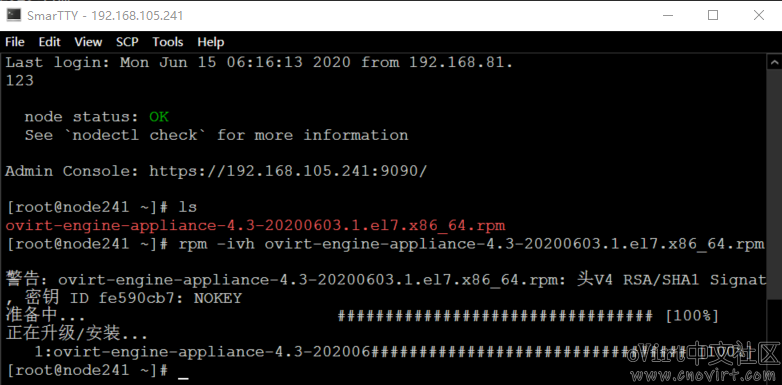
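One way to check that the data disk really is empty before running the wizard is to look at what `lsblk` reports beneath it. The sketch below assumes the data disk is /dev/sdb as in this article; the function name `disk_has_no_children` is illustrative. It only reads `lsblk` output and changes nothing on disk.

```shell
#!/bin/sh
# Reads the output of `lsblk -rn -o NAME,TYPE /dev/sdX` on stdin.
# Succeeds only when the output is a single line of type "disk",
# i.e. the device has no partitions, LVM volumes or multipath maps on top.
disk_has_no_children() {
    awk '$2 != "disk" { bad = 1 } END { exit ((NR == 1 && !bad) ? 0 : 1) }'
}

# Usage on each node (assumes the data disk is /dev/sdb):
#   lsblk -rn -o NAME,TYPE /dev/sdb | disk_has_no_children \
#       && echo "sdb looks empty" \
#       || echo "sdb has partitions or other layers on it"
```

A disk that passes this check can still carry old filesystem or LVM signatures, so treat the result as a first filter rather than a guarantee.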

Transfer the ovirt-engine appliance RPM to the node241.com node (SmarTTY is recommended) and install it:

rpm -ivh ovirt-engine-appliance-4.3-20200603.1.el7.x86_64.rpm

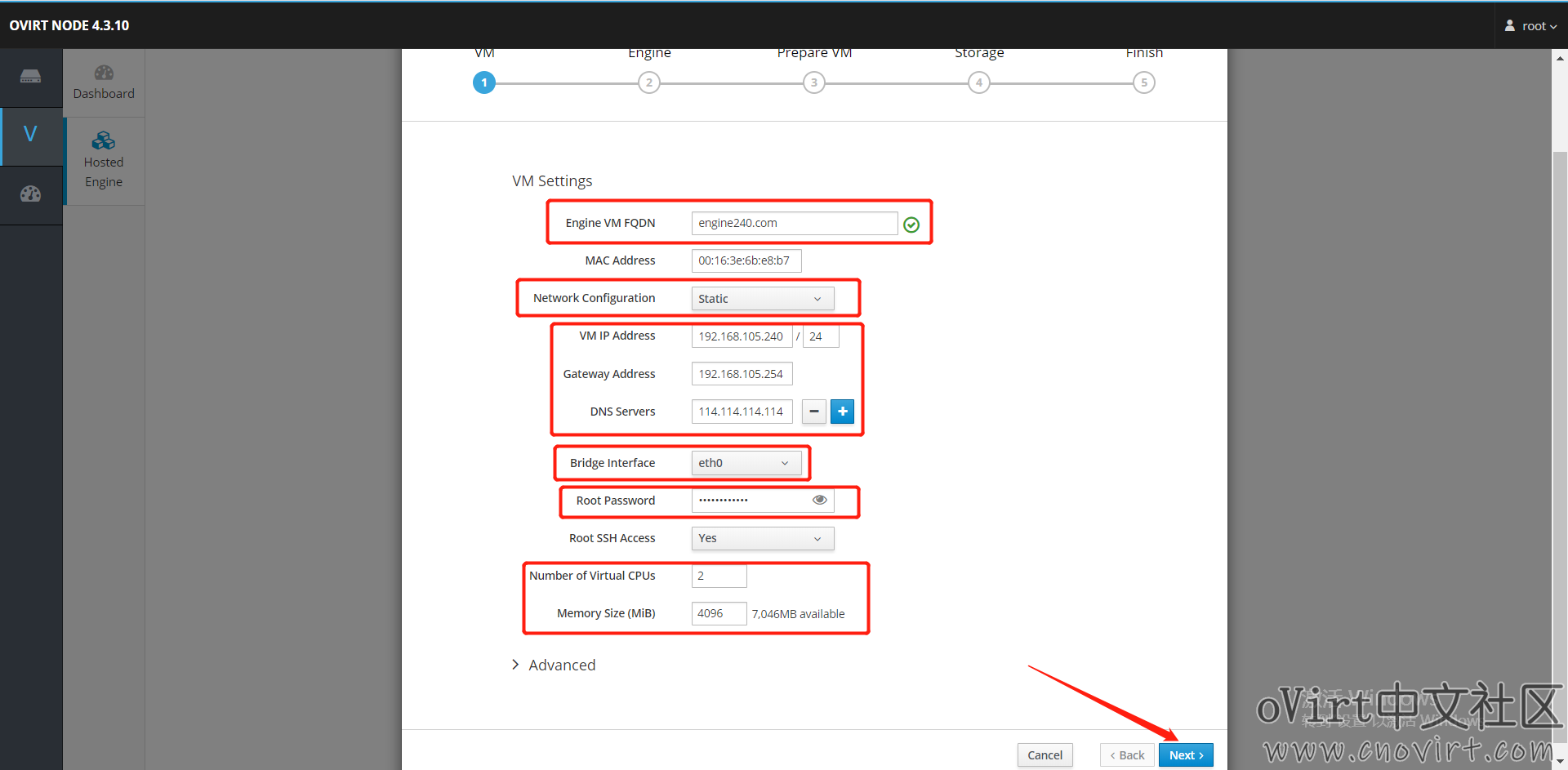

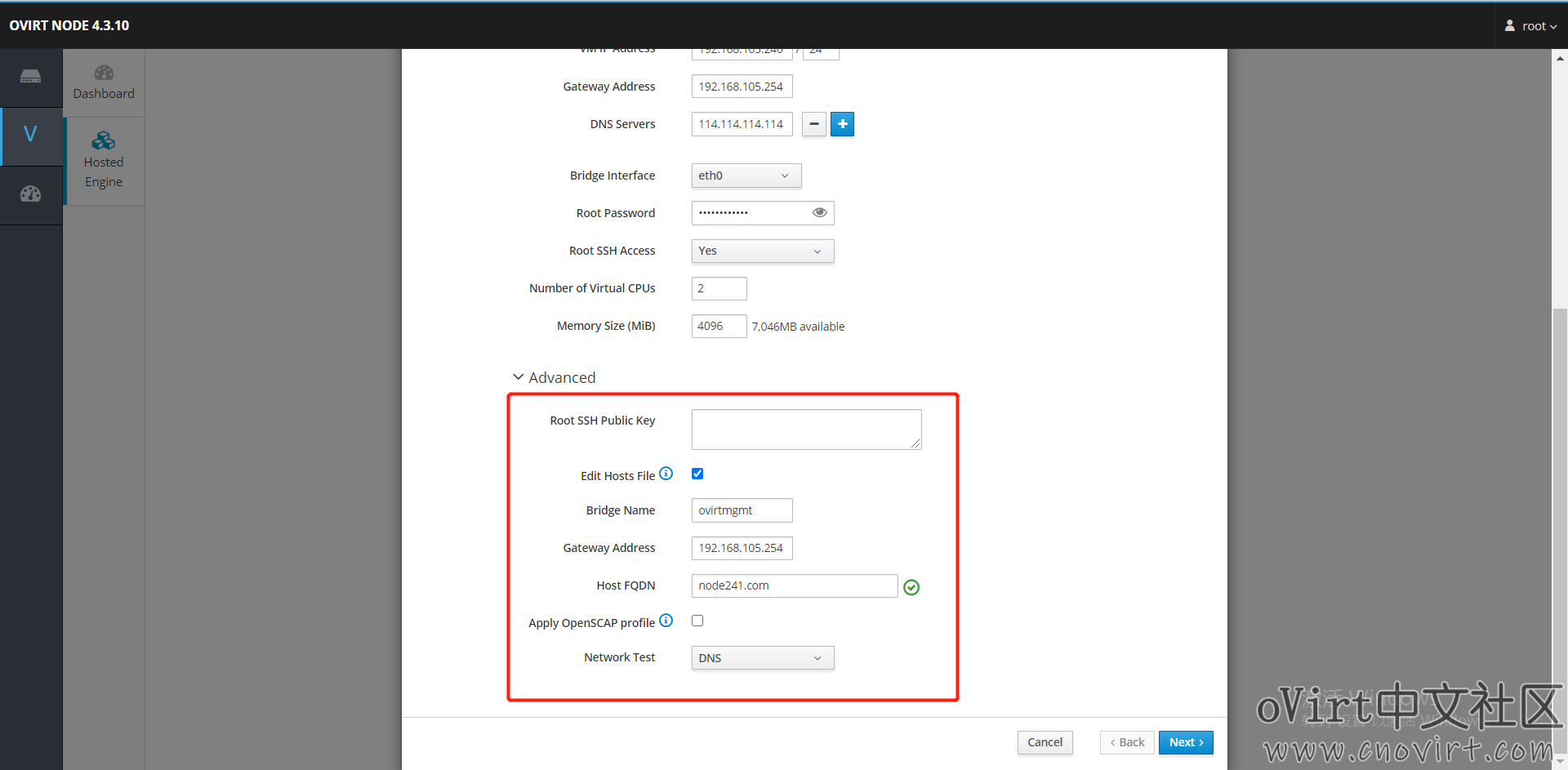

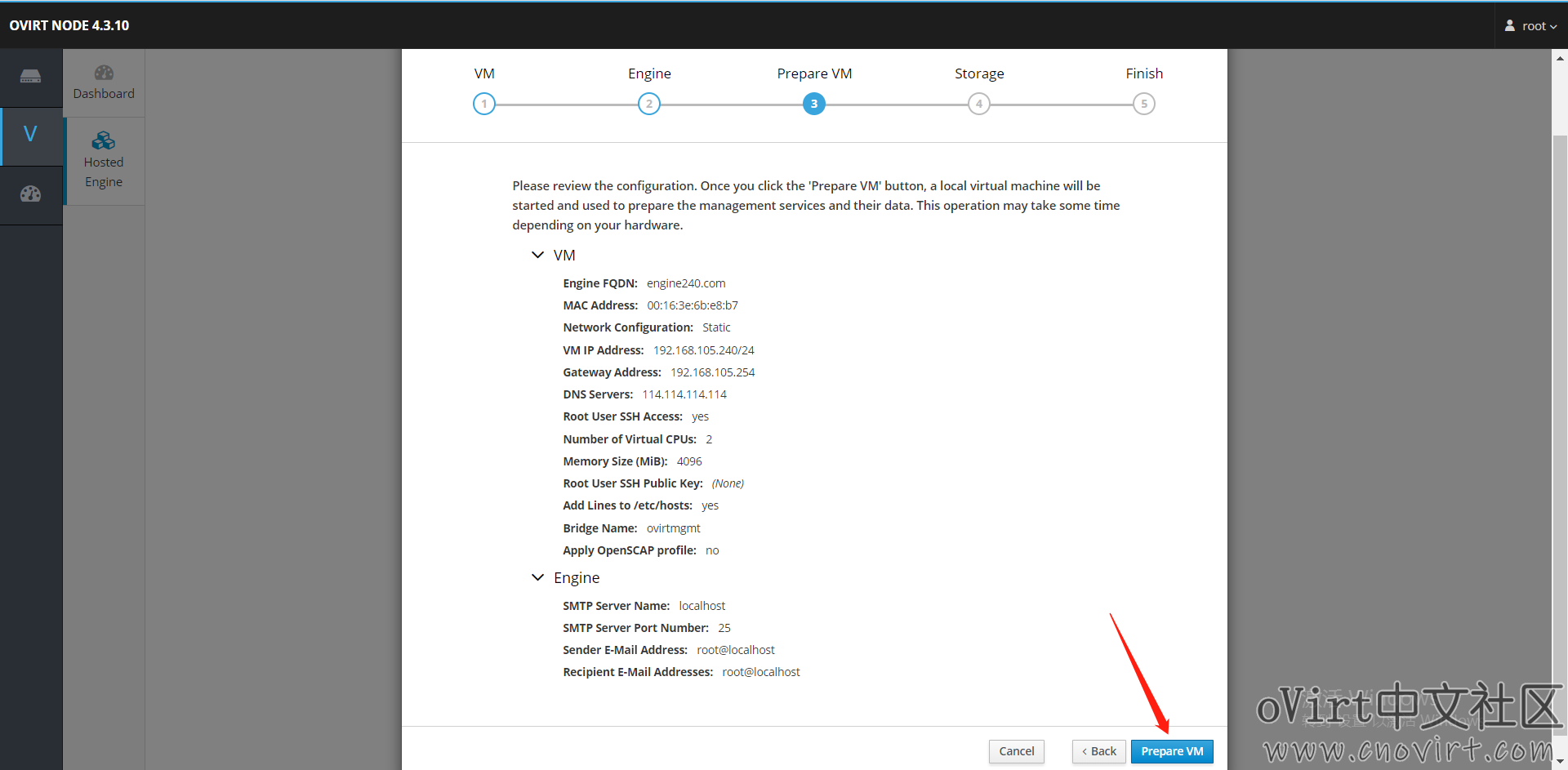

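Enter the engine configuration:

2 CPU cores and 4G of memory (each node has 4 cores and 8G in total, so some resources are held back). The engine needs at least 4G of memory.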

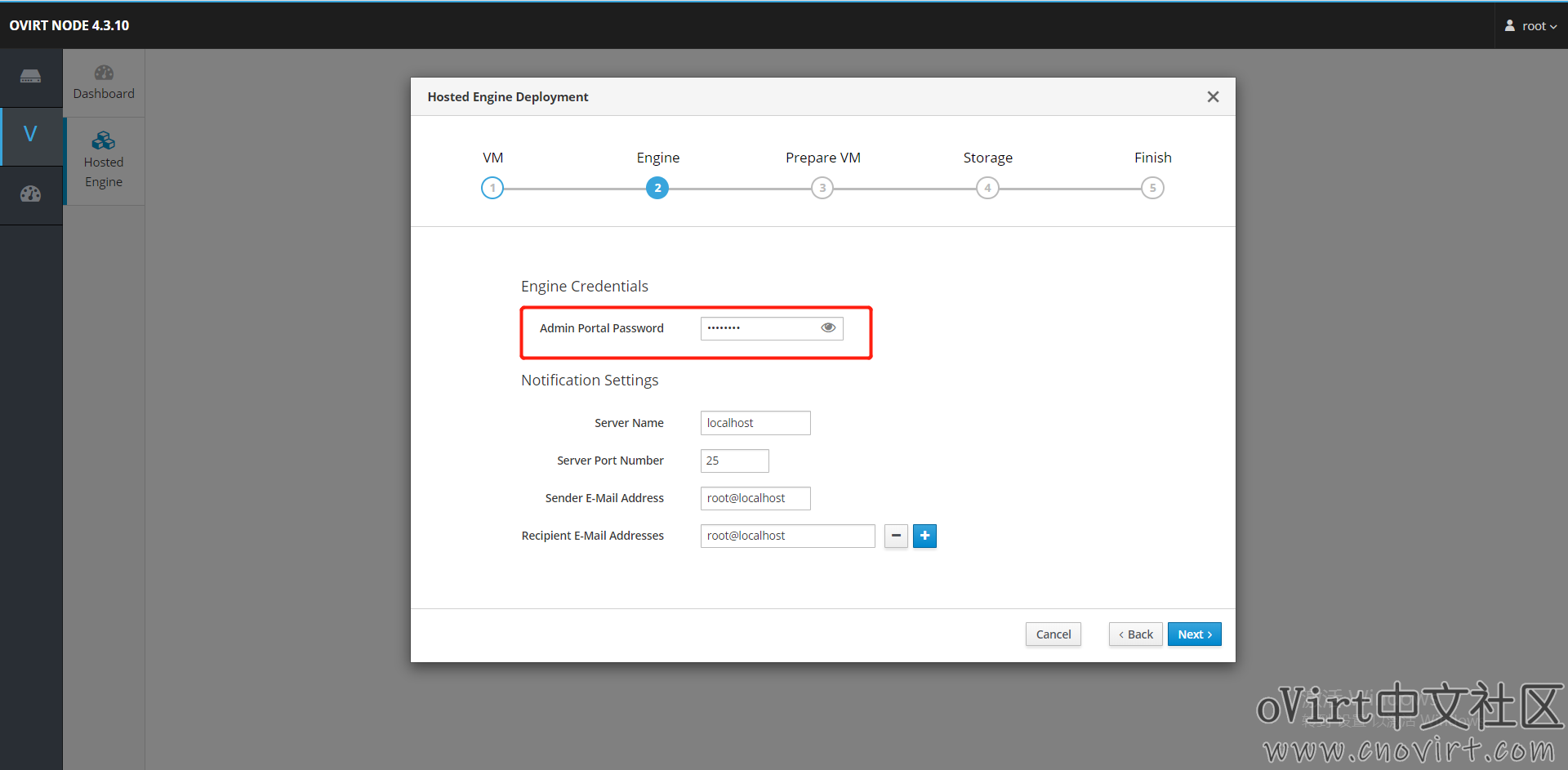

Set the password for the admin account, which is used to log in to the admin portal:

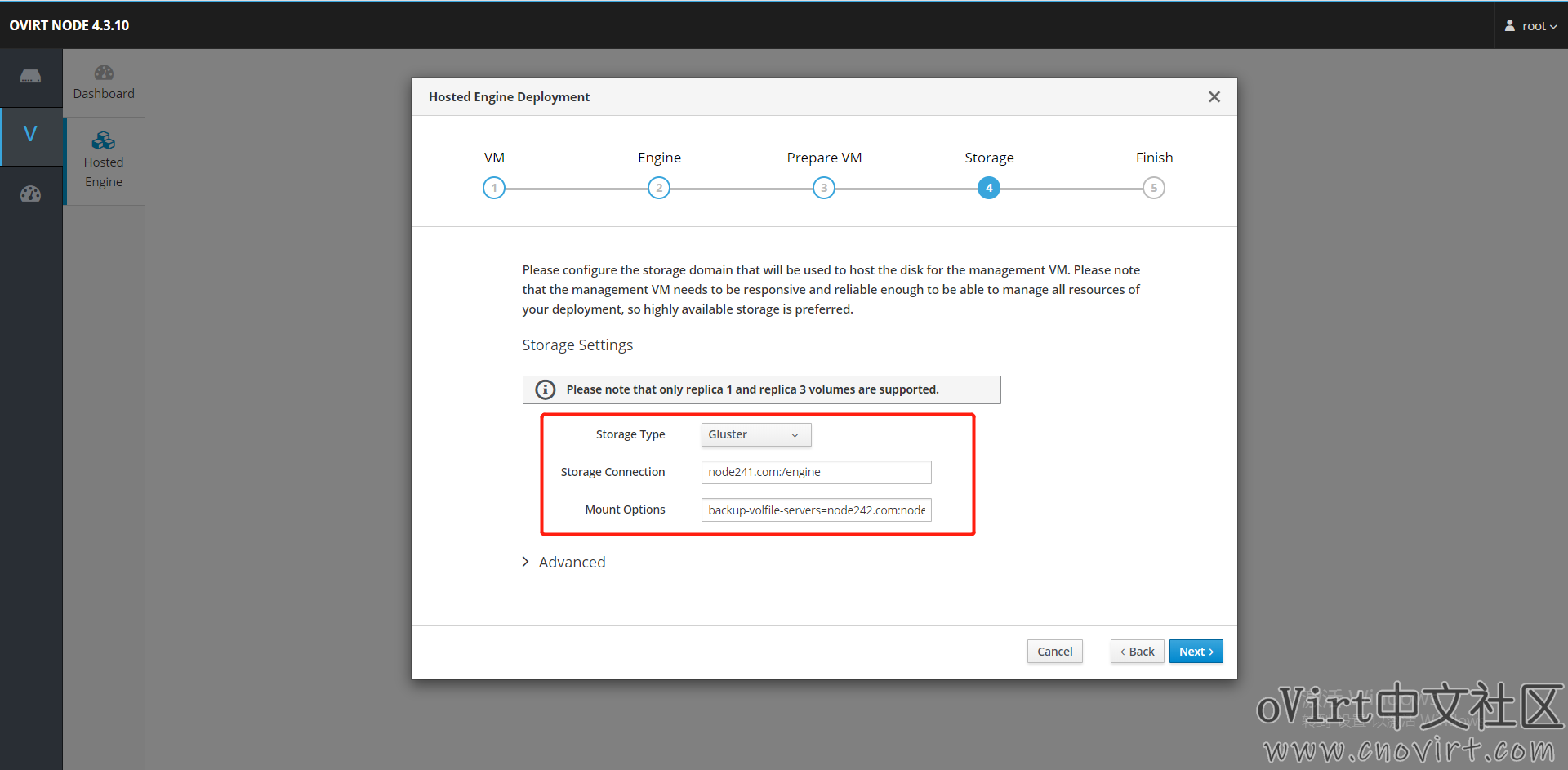

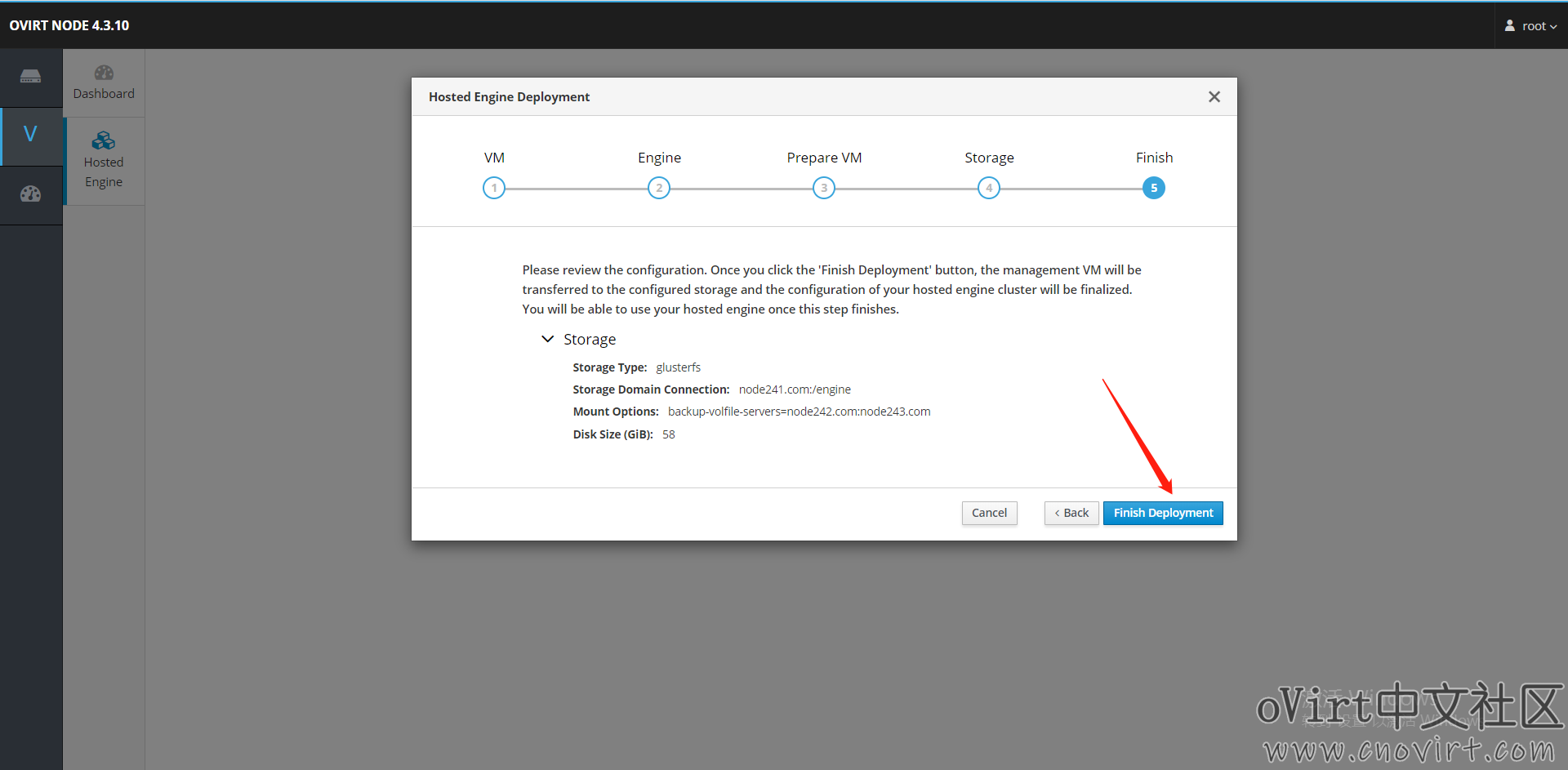

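Configure the HostedEngine storage. Select Gluster as the storage type and enter the path of the engine volume created earlier: node241.com:/engine

Mount options: backup-volfile-servers=node242.com:node243.com

(Use the host domain names from your own environment)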
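The mount option is simply the other Gluster hosts joined with colons. A small sketch for building it from your own host names follows; the function name `backup_volfile_opt` is illustrative.

```shell
#!/bin/sh
# Join the given host names with ":" to form the Gluster mount option.
# "$*" joins the arguments using the first character of IFS.
backup_volfile_opt() {
    old_ifs=$IFS
    IFS=:
    printf 'backup-volfile-servers=%s\n' "$*"
    IFS=$old_ifs
}

# Host names from this article; substitute your own.
backup_volfile_opt node242.com node243.com
```

The hosts listed are the ones other than the server named in the storage path, so that the mount can fall back to them if node241.com is unavailable.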

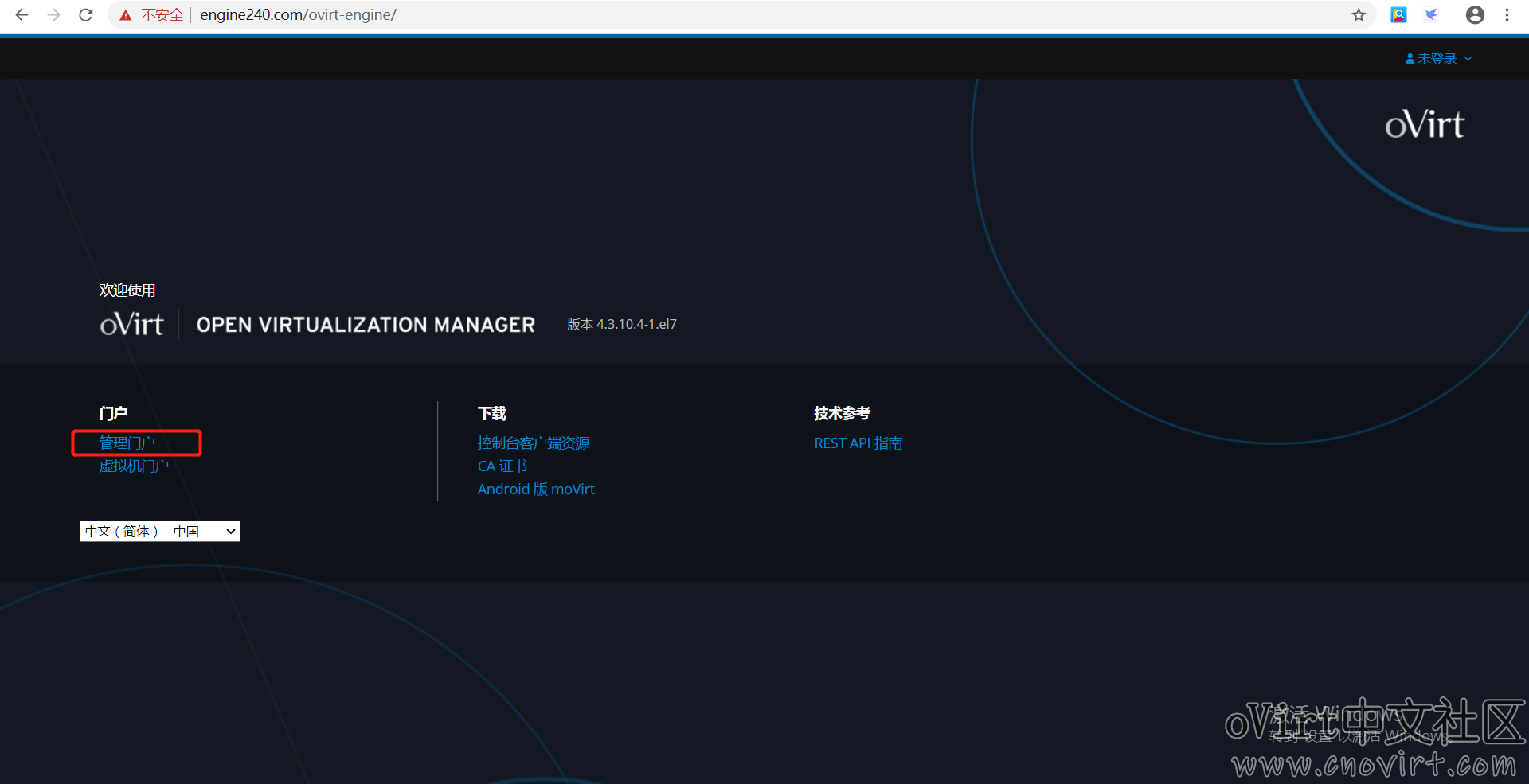

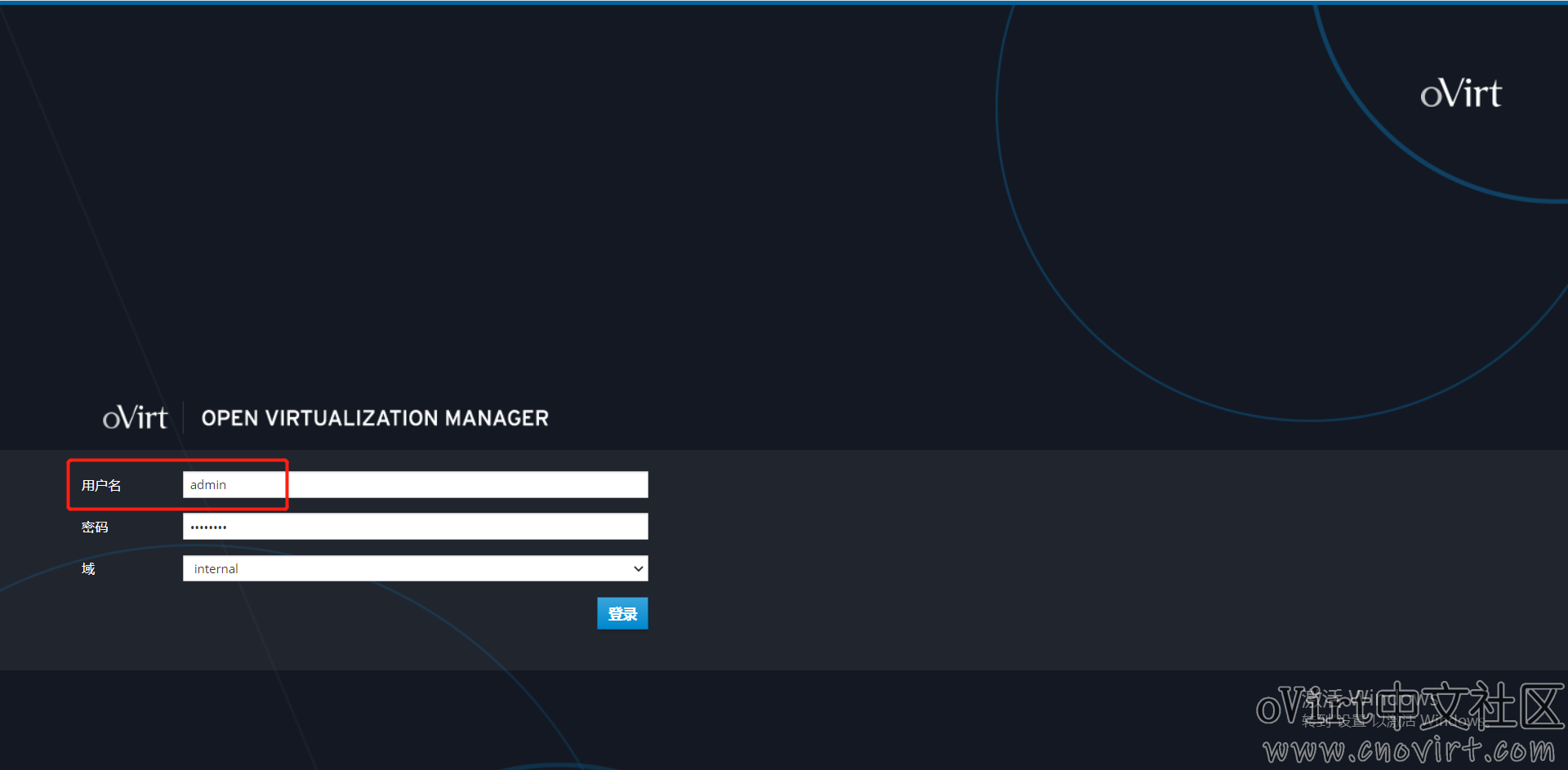

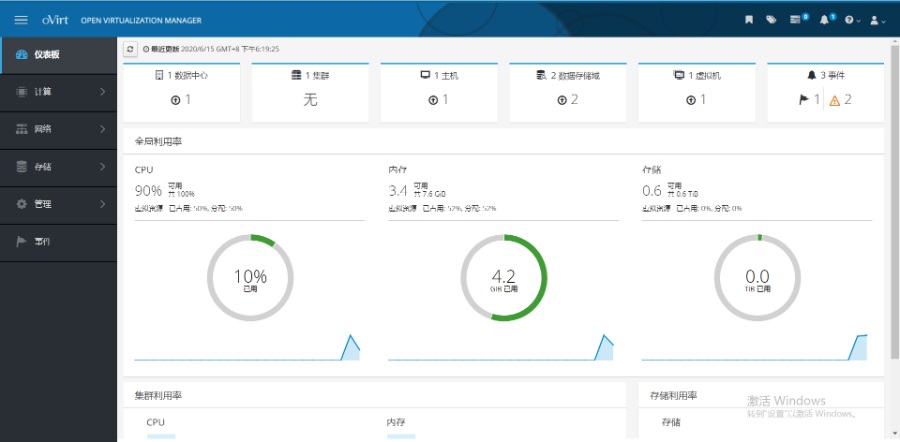

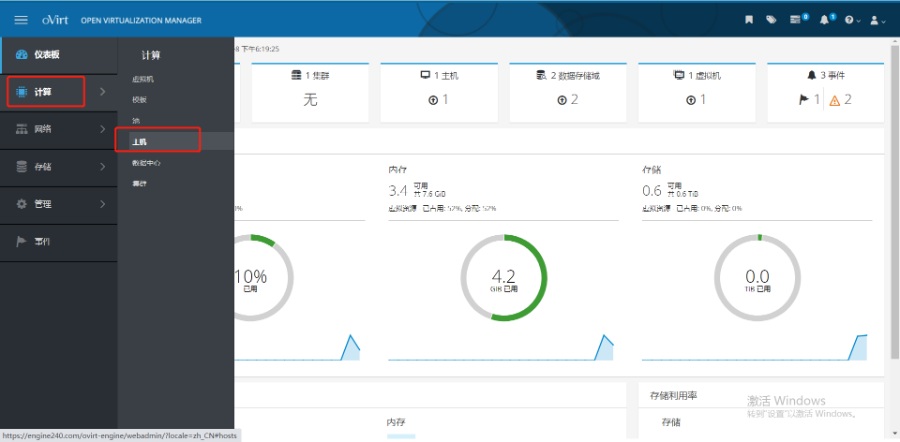

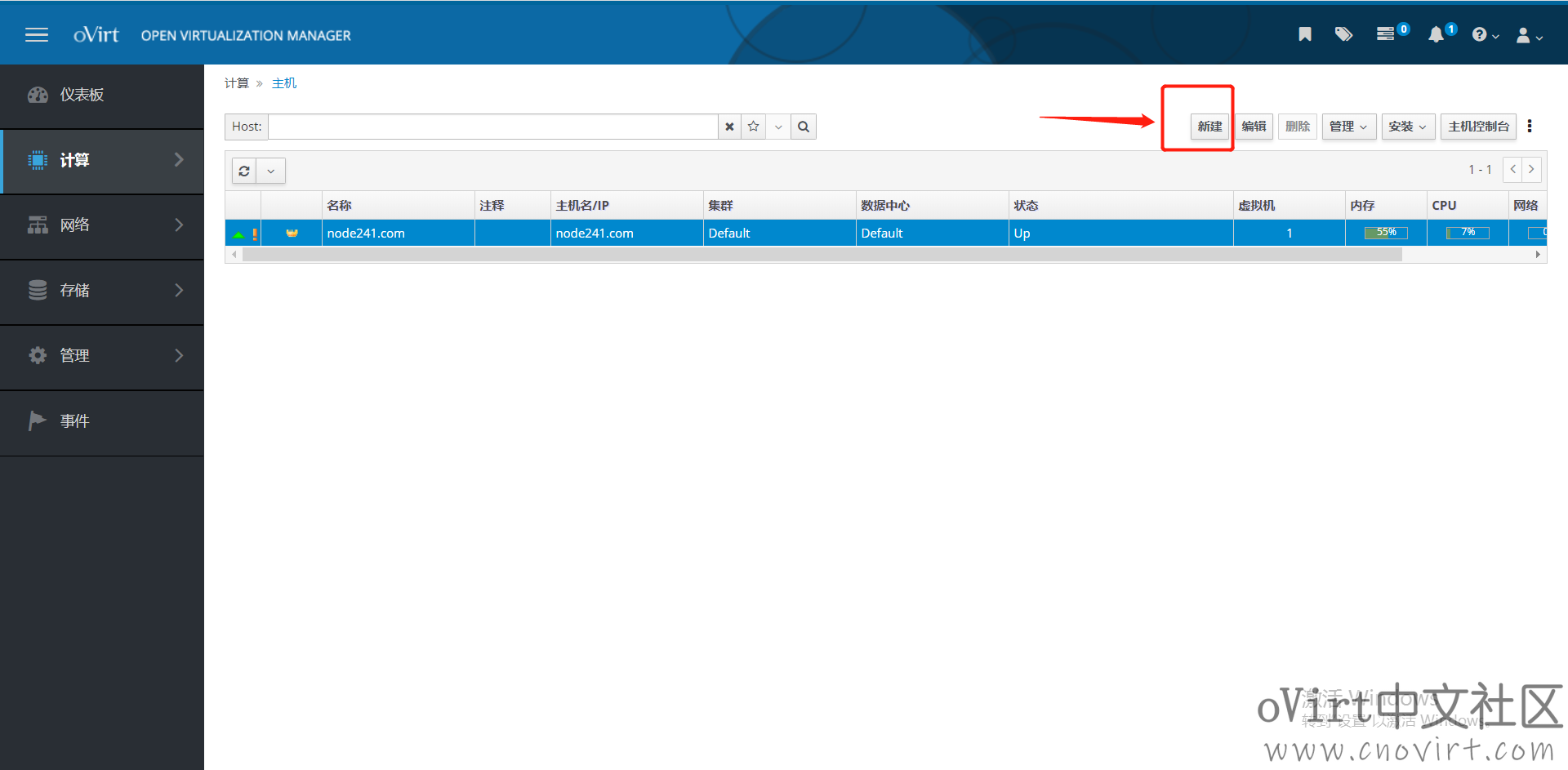

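Deployment process: accessing the admin portal

Add the engine's domain mapping to the hosts file on your local machine:

(On Windows 10 the file is under C:\Windows\System32\drivers\etc; Notepad++ is a good editor for this)

192.168.105.240 engine240.com

Open https://engine240.com in a browser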

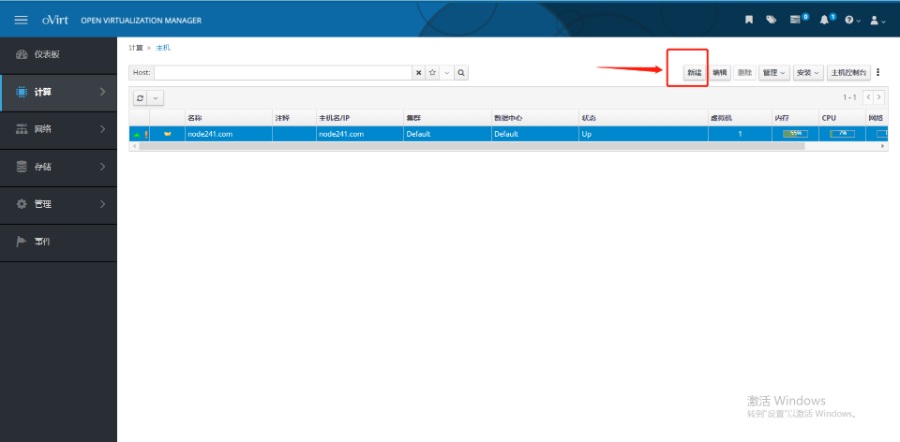

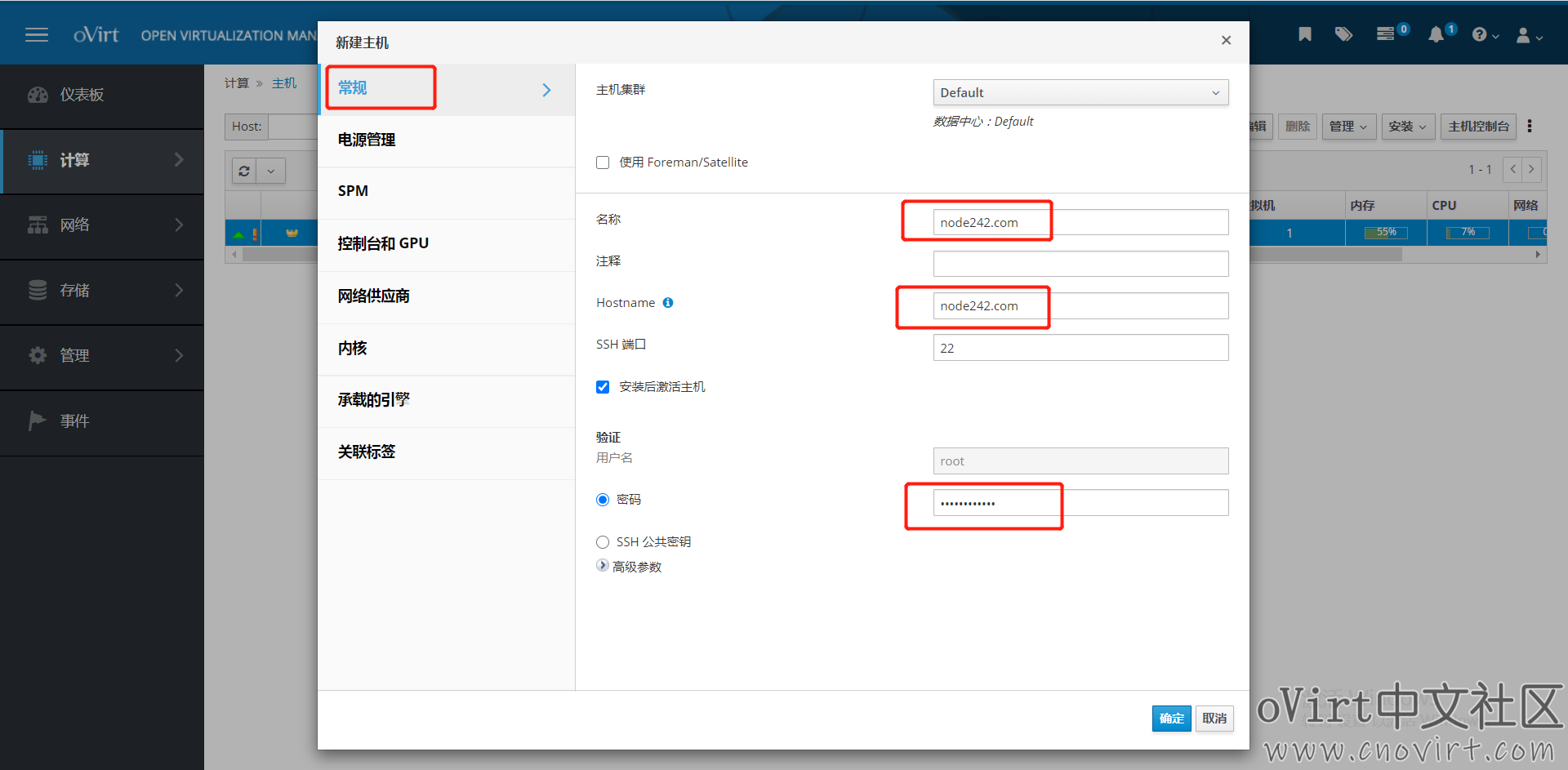

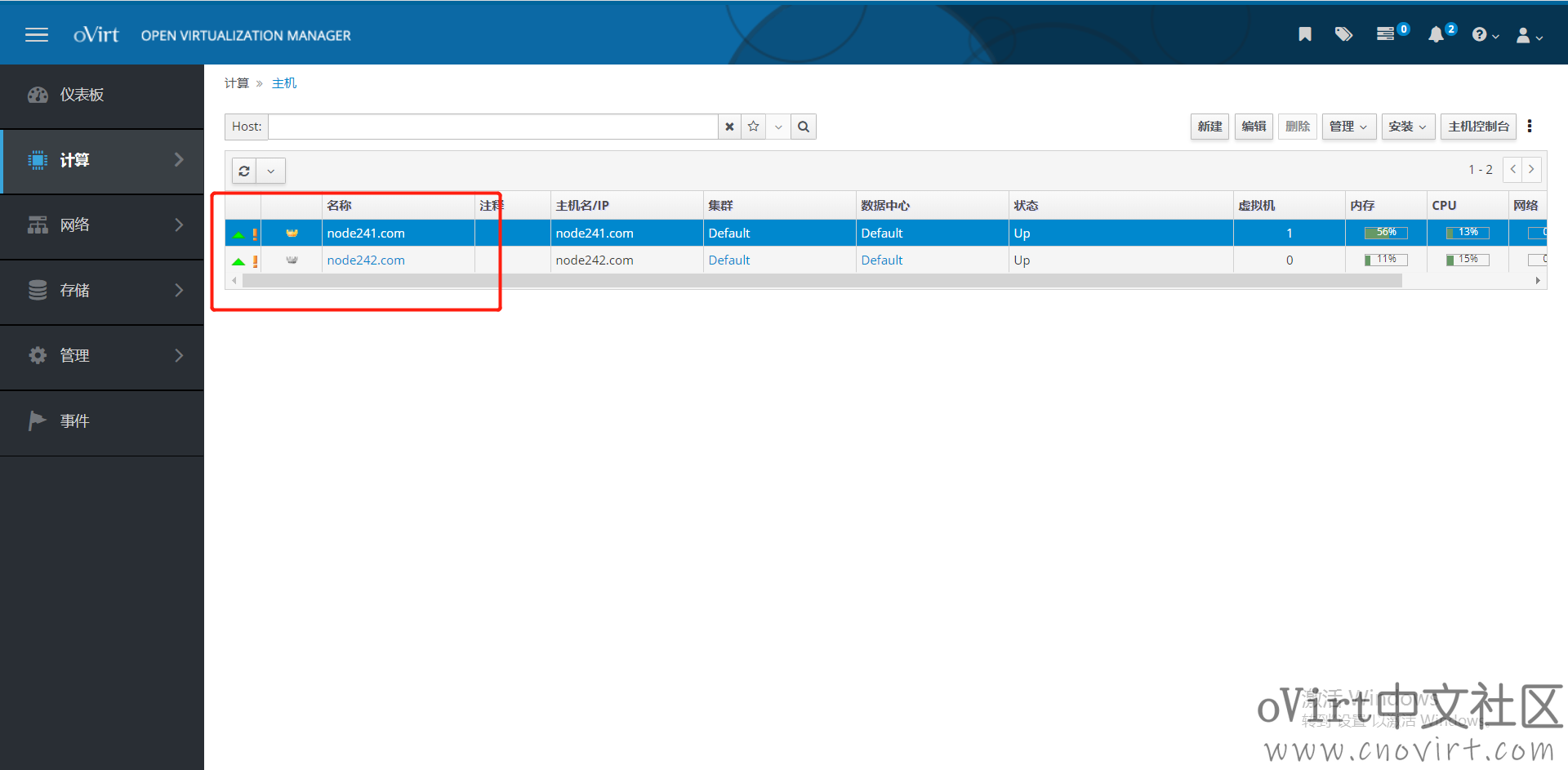

In /etc/hosts on the engine, add domain mappings for the two node hosts that are about to be added:

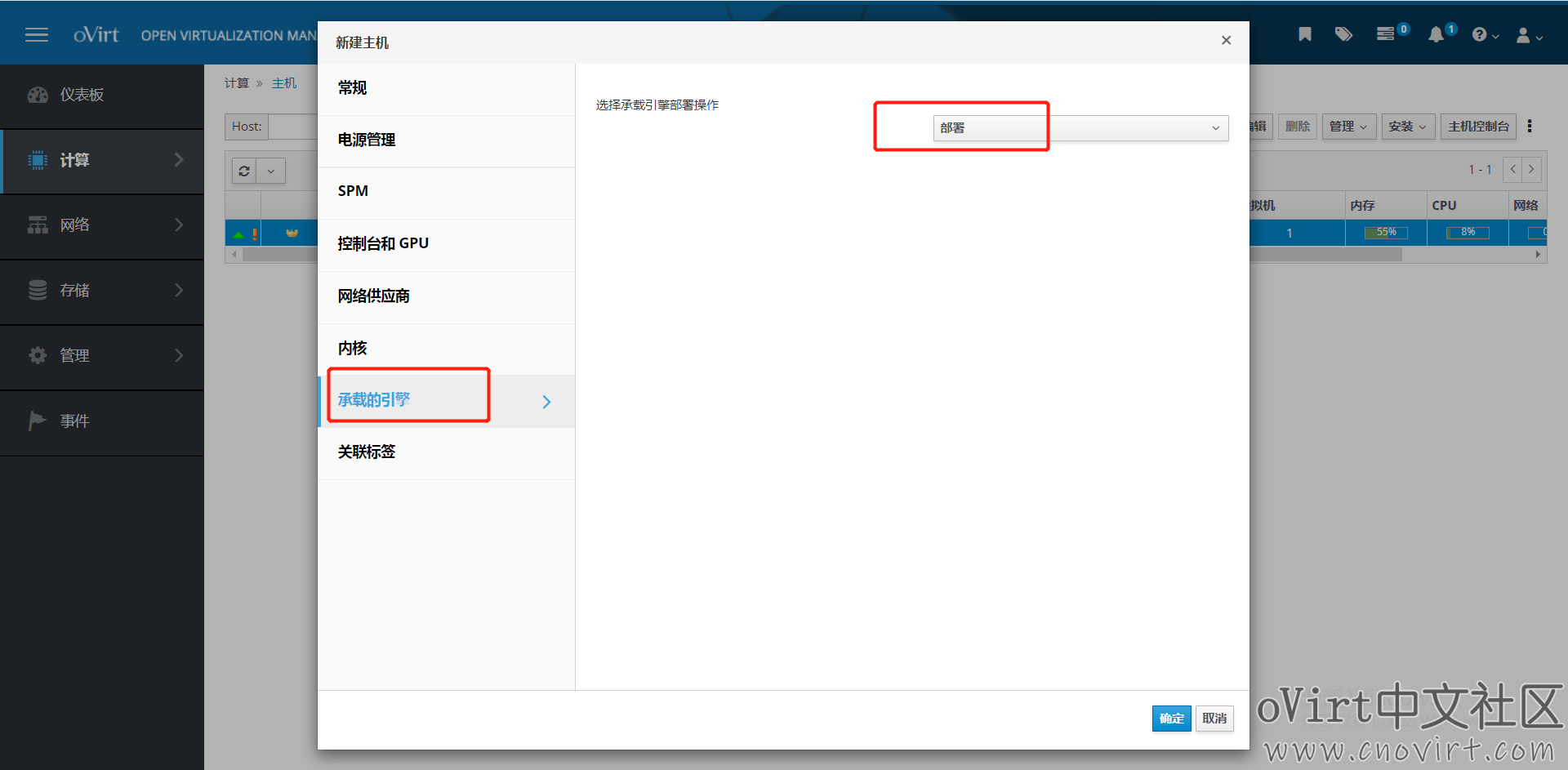

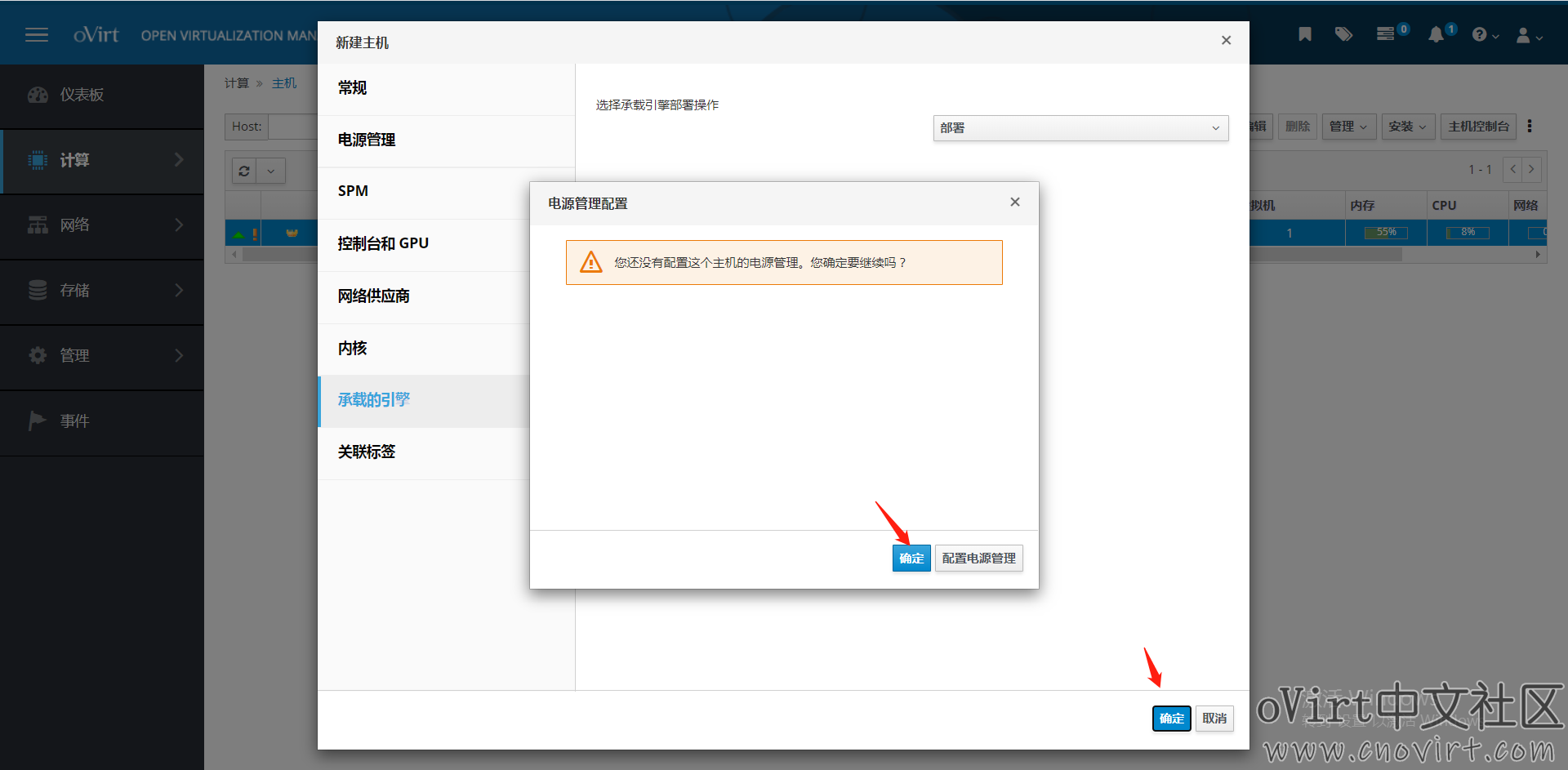

Under Hosted Engine, choose "Deploy":

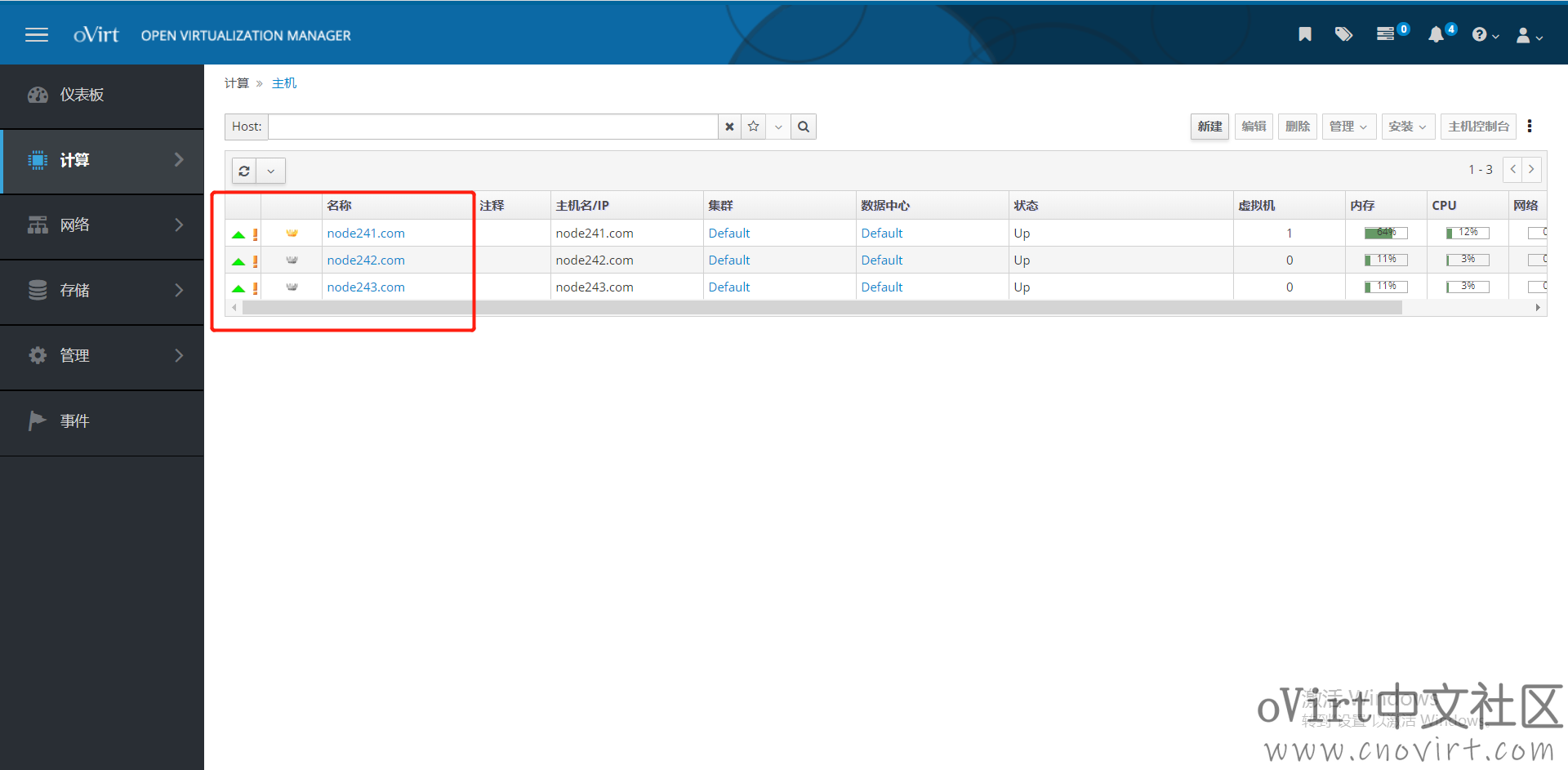

Add the third node the same way:

PS: When reposting this article, please credit the source: oVirt中文社区 (oVirt Chinese community, www.cnovirt.com)


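So storage and compute can share one IP, right?

In a test environment, yes: the storage network and the VM service network can be shared. In production they need to be separated.

Admin, I can't create logical volumes in ovirt-node.

In 4.3 this step creates the logical volumes automatically; you don't need to carve them out beforehand.

This may have changed in 4.4.2. I'll put together a tutorial for the new version soon.

Do you have two disks now, one for the system and the other for GlusterFS storage? Check the current state of the disks and logical volumes with: lsblk

Moderator, my deployment fails with the error below. Could you take a look?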

Deployment errors: code 505: Host node01.ovirt.com installation failed. Failed to configure management network on the host., code 9000: Failed to verify Power Management configuration for Host node01.ovirt.com

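It's hard to say from this error alone; it has to be analyzed together with the environment details and the surrounding log entries.

The setup is: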

10.28.139.65 node01.ovirt.com

10.28.139.66 node02.ovirt.com

10.28.139.67 node03.ovirt.com

10.28.139.162 engine.ovirt.com

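Gluster is already deployed on this network, also using the automatic deployment in the node.

Resources are plentiful: three physical servers.

The two NICs were teamed in active-backup mode.

I've now removed the team and configured the IP directly on the NIC to narrow down the problem.

Are there any other logs I can look at?

The logs are on the node that ran the deployment, under: /var/log/ovirt-hosted-engine-setup

Solved. The problem was the NIC team, which uses the teamctl tool. After configuring the IP directly on the physical NIC, the automatic engine deployment completes. Next time I'll try building a bond with the button in the node portal and see whether that causes problems. Perhaps you could help test it too.

oVirt does not support team; it supports bond.

Moderator, I created an ISO-type domain backed by Gluster (LV thin volume), and the domain status is normal.

Why does uploading an ISO file fail? I don't know how to troubleshoot it. Any ideas?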

Last login: Thu Jun 18 16:42:36 2020 from 10.7.129.217

[root@engine ~]# engine-iso-uploader --iso-domain=iso_domain upload /root/CentOS-7-x86_64-Minimal-2003.iso

Please provide the REST API password for the admin@internal oVirt Engine user (CTRL+D to abort):

Uploading, please wait…

INFO: Start uploading /root/CentOS-7-x86_64-Minimal-2003.iso

ERROR: Failed to create dc51b3c0-8ca4-4cda-ba27-27acdb7c3fd8/images/11111111-1111-1111-1111-111111111111//root/CentOS-7-x86_64-Minimal-2003.iso: Success

[root@engine ~]# engine-iso-uploader -i iso_domain upload CentOS-7-x86_64-Minimal-2003.iso

Please provide the REST API password for the admin@internal oVirt Engine user (CTRL+D to abort):

Uploading, please wait…

INFO: Start uploading CentOS-7-x86_64-Minimal-2003.iso

Segmentation fault

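Try uploading directly from the admin portal: Storage -> Disks -> Upload.

Isn't that for uploading disk image files? I'm uploading an ISO file to boot from the virtual CD drive and install the system manually.

ISOs can be uploaded there too;

alternatively, create an ISO domain and copy the ISO directly into the ISO domain directory.

I manually created an ISO-type domain and its status is normal, but in the Storage -> Disks -> Upload dialog you mentioned, disk type only offers the data type. Is there some configuration I still need to add?

Disk type? There's no such option. Which oVirt version?

My mistake, it's the disk profile. Where is that configured?

I manually created an ISO-type domain and its status is normal, but in the Storage -> Disks -> Upload dialog you mentioned, the disk profile only offers the data type. Is there some configuration I still need to add?

Ignore the disk profile field.

It worked. But then what is the point of an ISO-type domain?

I found this sentence in the official documentation: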

ISO Domain: ISO domains store ISO files (or logical CDs) used to install and boot operating systems and applications for the virtual machines. An ISO domain removes the data center’s need for physical media. An ISO domain can be shared across different data centers. ISO domains can only be NFS-based. Only one ISO domain can be added to a data center.

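An ISO domain can only be NFS-based, yet the admin portal lets us choose GlusterFS when creating one. Strange.

NFS is the recommended choice. In a hyperconverged environment, just upload directly to the data domain and skip the ISO domain altogether; upstream is deprecating ISO domains anyway…

OK, I'll use the data domain from now on. After I attached the ISO domain to a NAS, the engine-iso-uploader command worked.

How do I add a NAT network or an internal network for virtual machines in oVirt?

oVirt networking uses Linux bridges and does not support NAT.

Just assign addresses from your own subnet; connectivity between networks has to be configured on the external physical switch.

Then what is OVS for?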

[root@node01 vdsm]# ovs-vsctl show

fa3ae4ec-5a72-4689-a21c-c72d34148468

Bridge br-int

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port “ovn-a05a02-0”

Interface “ovn-a05a02-0″

type: geneve

options: {csum=”true”, key=flow, remote_ip=”10.28.139.67″}

Port “ovn-4c9366-0”

Interface “ovn-4c9366-0″

type: geneve

options: {csum=”true”, key=flow, remote_ip=”10.28.139.66″}

ovs_version: “2.11.0”

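OVS is a technology preview feature and is not recommended. Also, although OVS is supported, no management interface is provided for it.

If you want to build a complex network environment, you can import OpenStack Neutron into oVirt as an external network provider.

In practice, the default bridge combined with VLANs should cover typical scenarios.

OK.

Could you do a write-up on External Network Providers, with a lab?

Will do, when I have time…

I deployed three ovirt-node nodes in VMware Workstation and hit the error below while deploying storage. All nodes are healthy. Each node has two disks: 100G for the system with automatic partitioning, and 200G for storage with no data, unformatted and unpartitioned, simply attached to the system. The error details are as follows: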

TASK [gluster.infra/roles/backend_setup : Get the UUID of the devices] *********

failed: [linuxwt30.com] (item=sdb) => {“ansible_loop_var”: “item”, “changed”: true, “cmd”: “multipath -a /dev/sdb”, “delta”: “0:00:00.017863”, “end”: “2020-12-29 01:14:03.432997”, “item”: “sdb”, “msg”: “non-zero return code”, “rc”: 1, “start”: “2020-12-29 01:14:03.415134”, “stderr”: “Dec 29 01:14:03 | sdb: failed to get udev uid: Invalid argument\nDec 29 01:14:03 | sdb: failed to get sysfs uid: Invalid argument\nDec 29 01:14:03 | sdb: failed to get sgio uid: No such file or directory”, “stderr_lines”: [“Dec 29 01:14:03 | sdb: failed to get udev uid: Invalid argument”, “Dec 29 01:14:03 | sdb: failed to get sysfs uid: Invalid argument”, “Dec 29 01:14:03 | sdb: failed to get sgio uid: No such file or directory”], “stdout”: “”, “stdout_lines”: []}

TASK [gluster.infra/roles/backend_setup : Add wwid to blacklist in blacklist.conf file] ***

skipping: [linuxwt30.com] => (item={‘msg’: ‘non-zero return code’, ‘cmd’: ‘multipath -a /dev/sdb’, ‘stdout’: ”, ‘stderr’: ‘Dec 29 01:14:03 | sdb: failed to get udev uid: Invalid argument\nDec 29 01:14:03 | sdb: failed to get sysfs uid: Invalid argument\nDec 29 01:14:03 | sdb: failed to get sgio uid: No such file or directory’, ‘rc’: 1, ‘start’: ‘2020-12-29 01:14:03.415134’, ‘end’: ‘2020-12-29 01:14:03.432997’, ‘delta’: ‘0:00:00.017863’, ‘changed’: True, ‘failed’: True, ‘invocation’: {‘module_args’: {‘_raw_params’: ‘multipath -a /dev/sdb’, ‘_uses_shell’: True, ‘warn’: True, ‘stdin_add_newline’: True, ‘strip_empty_ends’: True, ‘argv’: None, ‘chdir’: None, ‘executable’: None, ‘creates’: None, ‘removes’: None, ‘stdin’: None}}, ‘stdout_lines’: [], ‘stderr_lines’: [‘Dec 29 01:14:03 | sdb: failed to get udev uid: Invalid argument’, ‘Dec 29 01:14:03 | sdb: failed to get sysfs uid: Invalid argument’, ‘Dec 29 01:14:03 | sdb: failed to get sgio uid: No such file or directory’], ‘item’: ‘sdb’, ‘ansible_loop_var’: ‘item’})

skipping: [linuxwt31.com] => (item={‘msg’: ‘non-zero return code’, ‘cmd’: ‘multipath -a /dev/sdb’, ‘stdout’: ”, ‘stderr’: ‘Dec 29 01:14:03 | sdb: failed to get udev uid: Invalid argument\nDec 29 01:14:03 | sdb: failed to get sysfs uid: Invalid argument\nDec 29 01:14:03 | sdb: failed to get sgio uid: No such file or directory’, ‘rc’: 1, ‘start’: ‘2020-12-29 01:14:03.515774’, ‘end’: ‘2020-12-29 01:14:03.535799’, ‘delta’: ‘0:00:00.020025’, ‘changed’: True, ‘failed’: True, ‘invocation’: {‘module_args’: {‘_raw_params’: ‘multipath -a /dev/sdb’, ‘_uses_shell’: True, ‘warn’: True, ‘stdin_add_newline’: True, ‘strip_empty_ends’: True, ‘argv’: None, ‘chdir’: None, ‘executable’: None, ‘creates’: None, ‘removes’: None, ‘stdin’: None}}, ‘stdout_lines’: [], ‘stderr_lines’: [‘Dec 29 01:14:03 | sdb: failed to get udev uid: Invalid argument’, ‘Dec 29 01:14:03 | sdb: failed to get sysfs uid: Invalid argument’, ‘Dec 29 01:14:03 | sdb: failed to get sgio uid: No such file or directory’], ‘item’: ‘sdb’, ‘ansible_loop_var’: ‘item’})

skipping: [linuxwt32.com] => (item={‘msg’: ‘non-zero return code’, ‘cmd’: ‘multipath -a /dev/sdb’, ‘stdout’: ”, ‘stderr’: ‘Dec 29 01:14:04 | sdb: failed to get udev uid: Invalid argument\nDec 29 01:14:04 | sdb: failed to get sysfs uid: Invalid argument\nDec 29 01:14:04 | sdb: failed to get sgio uid: No such file or directory’, ‘rc’: 1, ‘start’: ‘2020-12-29 01:14:04.805236’, ‘end’: ‘2020-12-29 01:14:04.835129’, ‘delta’: ‘0:00:00.029893’, ‘changed’: True, ‘failed’: True, ‘invocation’: {‘module_args’: {‘_raw_params’: ‘multipath -a /dev/sdb’, ‘_uses_shell’: True, ‘warn’: True, ‘stdin_add_newline’: True, ‘strip_empty_ends’: True, ‘argv’: None, ‘chdir’: None, ‘executable’: None, ‘creates’: None, ‘removes’: None, ‘stdin’: None}}, ‘stdout_lines’: [], ‘stderr_lines’: [‘Dec 29 01:14:04 | sdb: failed to get udev uid: Invalid argument’, ‘Dec 29 01:14:04 | sdb: failed to get sysfs uid: Invalid argument’, ‘Dec 29 01:14:04 | sdb: failed to get sgio uid: No such file or directory’], ‘item’: ‘sdb’, ‘ansible_loop_var’: ‘item’})

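Hi, with a three-server deployment, the third (arbiter) node doesn't take up much space, does it?

You can use the 2+1 mode (one host acts as arbiter) or no arbiter (all 3 hosts are equal);

With an arbiter node, the arbiter stores only a small amount of metadata, so total space usage is equivalent to 2 replicas;

Without an arbiter, the 3 hosts use roughly the same amount of space.

Is the data at risk without an arbiter?

The arbiter is there to save space (2 replicas + 1 arbiter).

Without an arbiter you get 3 replicas, which is comparatively safer.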
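The space trade-off described in this exchange is simple arithmetic. The sketch below treats the arbiter's metadata as negligible, which is an approximation; the function name `raw_usage_gib` and the 500 GiB figure are illustrative.

```shell
#!/bin/sh
# Raw capacity consumed across the cluster for a given amount of VM data.
#   $1 = logical data in GiB
#   $2 = layout: "replica3" (three full copies) or "arbiter" (two copies + arbiter)
# ASSUMPTION: the arbiter brick's metadata is small enough to ignore.
raw_usage_gib() {
    case "$2" in
        replica3) echo $(( $1 * 3 )) ;;
        arbiter)  echo $(( $1 * 2 )) ;;
        *) echo "unknown layout: $2" >&2; return 1 ;;
    esac
}

raw_usage_gib 500 replica3   # prints 1500
raw_usage_gib 500 arbiter    # prints 1000
```

So for the same data, the arbiter layout needs about two thirds of the raw space of full 3-way replication, at the cost of holding only two full copies.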

I followed the document and got the error below. The disks are dedicated disks. Do they need to be formatted first?

failed: [node4.com] (item={‘key’: ‘gluster_vg_sdb’, ‘value’: [{‘vgname’: u’gluster_vg_sdb’, ‘pvname’: u’/dev/sdb’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Device /dev/sdb excluded by a filter.\n”, “item”: {“key”: “gluster_vg_sdb”, “value”: [{“pvname”: “/dev/sdb”, “vgname”: “gluster_vg_sdb”}]}, “msg”: “Creating physical volume ‘/dev/sdb’ failed”, “rc”: 5}

failed: [node3.com] (item={‘key’: ‘gluster_vg_sdc’, ‘value’: [{‘vgname’: u’gluster_vg_sdc’, ‘pvname’: u’/dev/sdc’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Device /dev/sdc excluded by a filter.\n”, “item”: {“key”: “gluster_vg_sdc”, “value”: [{“pvname”: “/dev/sdc”, “vgname”: “gluster_vg_sdc”}]}, “msg”: “Creating physical volume ‘/dev/sdc’ failed”, “rc”: 5}

failed: [node2.com] (item={‘key’: u’gluster_vg_sdc’, ‘value’: [{‘vgname’: u’gluster_vg_sdc’, ‘pvname’: u’/dev/sdc’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Device /dev/sdc excluded by a filter.\n”, “item”: {“key”: “gluster_vg_sdc”, “value”: [{“pvname”: “/dev/sdc”, “vgname”: “gluster_vg_sdc”}]}, “msg”: “Creating physical volume ‘/dev/sdc’ failed”, “rc”: 5}

failed: [node4.com] (item={‘key’: ‘gluster_vg_sdc’, ‘value’: [{‘vgname’: u’gluster_vg_sdc’, ‘pvname’: u’/dev/sdc’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Device /dev/sdc excluded by a filter.\n”, “item”: {“key”: “gluster_vg_sdc”, “value”: [{“pvname”: “/dev/sdc”, “vgname”: “gluster_vg_sdc”}]}, “msg”: “Creating physical volume ‘/dev/sdc’ failed”, “rc”: 5}

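The disks need to be wiped first; they must not have any partitions on them before deployment.

Admin, it still doesn't work. I did it in the UI as shown below.

The error is as follows: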

TASK [Check if /var/log has enough disk space] ********************************* # Does this mean there is not enough space? I partitioned as required

task path: /usr/share/cockpit/ovirt-dashboard/ansible/hc_wizard.yml:38

skipping: [node3.com] => {“changed”: false, “skip_reason”: “Conditional result was False”}

skipping: [node2.com] => {“changed”: false, “skip_reason”: “Conditional result was False”}

skipping: [node4.com] => {“changed”: false, “skip_reason”: “Conditional result was False”}

TASK [Check if the /var is greater than 15G] *********************************** # Must it be larger than 15G?

task path: /usr/share/cockpit/ovirt-dashboard/ansible/hc_wizard.yml:43

skipping: [node3.com] => {“changed”: false, “skip_reason”: “Conditional result was False”}

skipping: [node2.com] => {“changed”: false, “skip_reason”: “Conditional result was False”}

skipping: [node4.com] => {“changed”: false, “skip_reason”: “Conditional result was False”}

TASK [Check if disks have logical block size of 512B] ************************** # Does this require formatting with mkfs.xfs -i size=512 /dev/sdx?

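How big are your disks? Give them plenty of space. When installing the node, let the installer partition the system disk automatically, and wipe the data disk.

All the disks are 4T. The system disk was installed with the defaults, and the data disks are the same.

(facepalm) Following the normal procedure it should work; I'm not sure what's going on in your case…

Solved. It was a disk partition problem after all.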

modprobe dm-multipath

multipath -F

Reference:

http://www.cnovirt.com/archives/956

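What's the deal with multipath? I ran into the same problem as you.

Is the multipath module missing?

Thanks, I searched for your commands on Baidu and now I understand.

How did you solve it?

For a 5-node deployment, how many nodes should be able to host the engine? Or can all 5 nodes be deployed as engine hosts?

A hyperconverged deployment must start with 3 nodes and be expanded 3 nodes at a time; according to upstream it can scale to 12 nodes;

With a small number of nodes, it's advisable to let all of them host the engine. The official limit on engine-hosting nodes is 7.

Great, that helps!

How do I configure it if each of the 3 nodes has only one disk?

Each node needs at least two disks.

The earlier description of the default volumes in this article was wrong; I just noticed and have corrected it.

Admin, creating virtual machines is very slow and I get "launching vm xx" failures; sometimes VNC shows the VM installation frozen.

Also, should the firewall be disabled on the nodes and the engine?

The slowness is probably because the specs are a bit low;

If the host nodes were installed from the node ISO, you don't need to touch the firewall; the defaults are fine.

Admin, is GlusterFS suitable for VM disk storage? oVirt recommends GlusterFS, but its performance is still somewhat worse than Ceph RBD. In the same environment, Proxmox with Ceph storage reads and writes faster than oVirt. Is there any room for tuning?

I hope you can share some production case studies.

Performance depends on the workload: VDI or business systems, and what kind of I/O the business systems do. Gluster and Ceph each have strengths and weaknesses, and the configuration matters a great deal. Gluster is comparatively simpler.

Admin, after building a 3-node environment I tested powering off the host running the engine. The engine did not start automatically on another host, so the platform was unusable until I recovered it manually. Is there a fix?

Is Hosted Engine set to "Deploy" on all three hosts?

Yes, all of them, deployed following this document. I mainly wanted to test whether the engine fails over to another host when its host goes down, but it didn't; I had to power that host back on and start the engine from the command line. When all hosts are healthy, migrating the engine works fine.

Then it should normally work. Check whether the ovirt-ha-agent service is running properly on all three hosts.

There's a delay before the engine restarts; try waiting a bit longer.

If that doesn't help, you'll have to dig through the logs, check the Gluster status, and so on.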
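A rough sketch of the service check suggested above, run from one machine over SSH. It assumes the host names used in this article and the passwordless root SSH configured earlier; the function name `check_ha_services` and the `DRY_RUN` switch are illustrative. With `DRY_RUN=1` it only prints the commands it would run.

```shell
#!/bin/sh
# Host names from this article; replace with your own.
HOSTS="node241.com node242.com node243.com"

# Run (or, with DRY_RUN=1, just print) the HA service check on every host.
# `systemctl is-active` prints one status line per unit named.
check_ha_services() {
    for h in $HOSTS; do
        cmd="systemctl is-active ovirt-ha-agent ovirt-ha-broker"
        if [ "${DRY_RUN:-0}" = "1" ]; then
            printf 'ssh root@%s %s\n' "$h" "$cmd"
        else
            printf '== %s ==\n' "$h"
            ssh "root@$h" "$cmd"
        fi
    done
}

# Usage:
#   DRY_RUN=1; check_ha_services   # show the commands only
#   DRY_RUN=0; check_ha_services   # actually query the hosts
```

On any of the hosts, `hosted-engine --vm-status` gives the HA agents' own view of the engine VM, which is worth comparing against the service state.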

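I successfully added a Data Domain through an iSCSI gateway, but adding a host always reports "Host failed to attach one of the storage domains attached to it", and the newly added host ends up in the nonoperational state. What is the cause, and how do I fix it?

Thanks.

Is this a problem with adding another host after deployment was completed? Can this host reach the iSCSI storage?

Yes. The first machine is the hosted engine and the second is an oVirt node. Both hosts can reach the iSCSI storage; ping works.

I also noticed that after deleting the Data Domain, both physical hosts run normally.

Error while executing action Attach Storage Domain: Storage domain cannot be reached. Please ensure it is accessible from the host(s). This message keeps appearing, but pinging the iSCSI gateway from the host works.

Have you configured access permissions on the iSCSI side? Try mounting it with another iSCSI client.

What needs to be granted permission?

With iSCSI you generally have to register the host's IQN.

Yes, the initiator IQN is configured for both hosts.

And the iSCSI server side has granted the corresponding access? Try attaching it from the host with the iscsiadm command.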
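For reference, a manual test with `iscsiadm` usually goes discovery, login, then listing sessions. The sketch below only prints the commands so they can be reviewed before being run on the affected host. The portal address 192.0.2.10 and the target IQN are hypothetical placeholders, and the function name `iscsi_probe_cmds` is illustrative.

```shell
#!/bin/sh
# Print the iscsiadm commands for a manual connectivity test.
#   $1 = portal address of the iSCSI gateway (hypothetical default below)
#   $2 = target IQN (hypothetical default below; use the one discovery reports)
iscsi_probe_cmds() {
    portal="${1:-192.0.2.10}"
    target="${2:-iqn.2003-01.example:target1}"
    printf 'iscsiadm -m discovery -t sendtargets -p %s\n' "$portal"
    printf 'iscsiadm -m node -T %s -p %s --login\n' "$target" "$portal"
    printf 'iscsiadm -m session\n'
}

iscsi_probe_cmds
```

The initiator IQN that the server side has to allow is stored on each host in /etc/iscsi/initiatorname.iscsi, so it is worth comparing that file against the server's access list.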

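lsblk on both clients shows the iSCSI storage attached, but one of them still shows nonoperational in the engine UI.

nonoperational means the engine can communicate with the host, but something is misconfigured on the host so it cannot be operated. Judging by the error, this host cannot access the iSCSI storage properly.

That matches the message. After several attempts, the two machines have accumulated more and more iSCSI storage entries, and it still doesn't work.

Remove them all from the command line and add them again.

With oVirt engine 4.3, deploying the hosted engine fails with this error: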

ovirt.hosted_engine_setup:wait for the host to be up

fatal: [localhost]: FAILED! => msg: The ovirt_host_facts module has been renamed to ovirt_host_info, and the renamed one no longer returns ansible_facts

It's hard to tell from this error alone. Is there more information?

[ INFO ] TASK [ovirt.hosted_engine_setup : Wait for the host to be up]

[ ERROR ] fatal: [localhost]: FAILED! => {“ansible_facts”: {“ovirt_hosts”: [{“address”: “seisys05.bigdata.seisys.cn”, “affinity_labels”: [], “auto_numa_status”: “unknown”, “certificate”: {“organization”: “bigdata.seisys.cn”, “subject”: “O=bigdata.seisys.cn,CN=seisys05.bigdata.seisys.cn”}, “cluster”: {“href”: “/ovirt-engine/api/clusters/b8b18cbc-5374-11ec-bfbd-00163e7e8100”, “id”: “b8b18cbc-5374-11ec-bfbd-00163e7e8100”}, “comment”: “”, “cpu”: {“speed”: 0.0, “topology”: {}}, “device_passthrough”: {“enabled”: false}, “devices”: [], “external_network_provider_configurations”: [], “external_status”: “ok”, “hardware_information”: {“supported_rng_sources”: []}, “hooks”: [], “href”: “/ovirt-engine/api/hosts/bf046948-2ab7-49ac-9198-d4eee00f6c1d”, “id”: “bf046948-2ab7-49ac-9198-d4eee00f6c1d”, “katello_errata”: [], “kdump_status”: “unknown”, “ksm”: {“enabled”: false}, “max_scheduling_memory”: 0, “memory”: 0, “name”: “seisys05.bigdata.seisys.cn”, “network_attachments”: [], “nics”: [], “numa_nodes”: [], “numa_supported”: false, “os”: {“custom_kernel_cmdline”: “”}, “permissions”: [], “port”: 54321, “power_management”: {“automatic_pm_enabled”: true, “enabled”: false, “kdump_detection”: true, “pm_proxies”: []}, “protocol”: “stomp”, “se_linux”: {}, “spm”: {“priority”: 5, “status”: “none”}, “ssh”: {“fingerprint”: “SHA256:TH2t0gMiwmQDsO5jsBV2y3M+dmuFh/yuQvsw+XRnpQA”, “port”: 22}, “statistics”: [], “status”: “installing”, “storage_connection_extensions”: [], “summary”: {“total”: 0}, “tags”: [], “transparent_huge_pages”: {“enabled”: false}, “type”: “ovirt_node”, “unmanaged_networks”: [], “update_available”: false, “vgpu_placement”: “consolidated”}]}, “attempts”: 120, “changed”: false, “deprecations”: [{“msg”: “The ‘ovirt_host_facts’ module has been renamed to ‘ovirt_host_info’, and the renamed one no longer returns ansible_facts”, “version”: “2.13”}]}

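Hi admin, I deployed following your steps without problems on 3 physical machines. The ovirt-engine VM cannot be migrated, not even manually, while other VMs migrate fine. Once the engine's host goes down, the engine can't start and does not fail over to another host. How can I fix this?

Check whether "Deploy" was selected under Hosted Engine when the other two hosts were added. This must be set; otherwise the engine can neither migrate nor be highly available.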

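Thanks for clearing that up. If deployment is already finished and the system is in production, can the "Deploy" option still be selected now?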

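It can be used in production, but preferably once you're familiar with it; oVirt does demand a certain level of skill from operators. So far, normal use shows no major problems.

Thanks. I checked, and I deployed from the command line with ovirt-hosted-engine-setup.

Does engine240.com also run on these three hosts?

Yes, as a hosted engine, that is, running as a virtual machine.

Hi, a question: each of my three servers has many data disks, but the default UI only lets me configure one when setting up GlusterFS. How do I handle this? Thanks.

Hi. For each volume, only one disk per host can be selected. With multiple disks you can build several RAID groups and create one volume per RAID group.

OK, thanks. By the way, don't these hosts need time synchronization?

It's best to configure it manually. Use an external time source if you have one; otherwise let the hosts sync with the engine.

It's been stuck at Inject network configuration with guestfish for a long time. Is that normal?

Are you deploying inside a VMware virtual machine?

Yes. I left it overnight and nothing had moved by morning.

I see your article already covers this; I'll try again!

Hi, I got this problem while deploying the engine. How do I solve it?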

[ INFO ] TASK [ovirt.hosted_engine_setup : Create local VM]

[ INFO ] changed: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Get local VM IP]

[ ERROR ] fatal: [localhost]: FAILED! => {“attempts”: 90, “changed”: true, “cmd”: “virsh -r net-dhcp-leases default | grep -i 00:16:3e:12:b5:ea | awk ‘{ print $5 }’ | cut -f1 -d’/'”, “delta”: “0:00:00.111365”, “end”: “2021-03-19 15:59:47.731435”, “rc”: 0, “start”: “2021-03-19 15:59:47.620070”, “stderr”: “”, “stderr_lines”: [], “stdout”: “”, “stdout_lines”: []}

[ INFO ] TASK [ovirt.hosted_engine_setup : include_tasks]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Remove local vm dir]

[ INFO ] changed: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Remove temporary entry in /etc/hosts for the local VM]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Notify the user about a failure]

[ ERROR ] fatal: [localhost]: FAILED! => {“changed”: false, “msg”: “The system may not be provisioned according to the playbook results: please check the logs for the issue, fix accordingly or re-deploy from scratch.\n”}

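Is this deployed on a physical machine or in a virtual machine?

Hi, I'm deploying in VMware. This error appears while deploying the hosted engine; I've redeployed 3 times with the same error. Where should I start troubleshooting?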

[ INFO ] TASK [ovirt.hosted_engine_setup : Execute just a specific set of steps]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Force facts gathering]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Check local VM dir stat]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Enforce local VM dir existence]

[ INFO ] skipping: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : include_tasks]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Obtain SSO token using username/password credentials]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Fetch host facts]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Fetch cluster ID]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Fetch cluster facts]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Fetch Datacenter facts]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Fetch Datacenter ID]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Fetch Datacenter name]

[ INFO ] ok: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Add NFS storage domain]

[ INFO ] skipping: [localhost]

[ INFO ] TASK [ovirt.hosted_engine_setup : Add glusterfs storage domain]

[ ERROR ] Error: Fault reason is “Operation Failed”. Fault detail is “[Failed to fetch Gluster Volume List]”. HTTP response code is 400.

[ ERROR ] fatal: [localhost]: FAILED! => {“changed”: false, “msg”: “Fault reason is \”Operation Failed\”. Fault detail is \”[Failed to fetch Gluster Volume List]\”. HTTP response code is 400.”}

My /etc/hosts is as follows:

10.99.108.15 node1.lidc node1

10.99.108.26 node2.lidc node2

10.99.108.17 node3.lidc node3

10.99.108.25 engine.lidc

192.168.1.15 node1-gs.lidc node1-gs

192.168.1.26 node2-gs.lidc node2-gs

192.168.1.17 node3-gs.lidc node3-gs

192.168.2.15 node1-vm

192.168.2.26 node2-vm

192.168.2.17 node3-vm

I hit the same problem. Did you solve it? Is there a fix?

The log /var/log/ovirt-hosted-engine-setup/ovirt-hosted-engine-setup-ansible-create_storage_domain-202175101558-l6pv9k.log shows:

2021-08-05 06:16:51,427+0800 INFO ansible skipped {‘status’: ‘SKIPPED’, ‘ansible_task’: u’Add NFS storage domain’, ‘ansible_host’: u’localhost’, ‘ansible_playbook’: u’/usr/share/ovirt-hosted-engine-setup/ansible/trigger_role.yml’, ‘ansible_type’: ‘task’}

2021-08-05 06:16:51,428+0800 DEBUG ansible on_any args kwargs

2021-08-05 06:16:53,008+0800 INFO ansible task start {‘status’: ‘OK’, ‘ansible_task’: u’ovirt.hosted_engine_setup : Add glusterfs storage domain’, ‘ansible_playbook’: u’/usr/share/ovirt-hosted-engine-setup/ansible/trigger_role.yml’, ‘ansible_type’: ‘task’}

2021-08-05 06:16:53,009+0800 DEBUG ansible on_any args TASK: ovirt.hosted_engine_setup : Add glusterfs storage domain kwargs is_conditional:False

2021-08-05 06:16:53,010+0800 DEBUG ansible on_any args localhostTASK: ovirt.hosted_engine_setup : Add glusterfs storage domain kwargs

2021-08-05 06:16:56,160+0800 DEBUG var changed: host “localhost” var “otopi_storage_domain_details_gluster” type “” value: “{

“changed”: false,

“exception”: “Traceback (most recent call last):\n File \”/tmp/ansible_ovirt_storage_domain_payload_Vzc520/ansible_ovirt_storage_domain_payload.zip/ansible/modules/cloud/ovirt/ovirt_storage_domain.py\”, line 792, in main\n File \”/tmp/ansible_ovirt_storage_domain_payload_Vzc520/ansible_ovirt_storage_domain_payload.zip/ansible/module_utils/ovirt.py\”, line 623, in create\n **kwargs\n File \”/usr/lib64/python2.7/site-packages/ovirtsdk4/services.py\”, line 25564, in add\n return self._internal_add(storage_domain, headers, query, wait)\n File \”/usr/lib64/python2.7/site-packages/ovirtsdk4/service.py\”, line 232, in _internal_add\n return future.wait() if wait else future\n File \”/usr/lib64/python2.7/site-packages/ovirtsdk4/service.py\”, line 55, in wait\n return self._code(response)\n File \”/usr/lib64/python2.7/site-packages/ovirtsdk4/service.py\”, line 229, in callback\n self._check_fault(response)\n File \”/usr/lib64/python2.7/site-packages/ovirtsdk4/service.py\”, line 132, in _check_fault\n self._raise_error(response, body)\n File \”/usr/lib64/python2.7/site-packages/ovirtsdk4/service.py\”, line 118, in _raise_error\n raise error\nError: Fault reason is \”Operation Failed\”. Fault detail is \”[Failed to fetch Gluster Volume List]\”. HTTP response code is 400.\n”,

“failed”: true,

“msg”: “Fault reason is \”Operation Failed\”. Fault detail is \”[Failed to fetch Gluster Volume List]\”. HTTP response code is 400.”

}”

2021-08-05 06:16:56,162+0800 DEBUG var changed: host “localhost” var “ansible_play_hosts” type “” value: “[]”

2021-08-05 06:16:56,162+0800 DEBUG var changed: host “localhost” var “play_hosts” type “” value: “[]”

2021-08-05 06:16:56,162+0800 DEBUG var changed: host “localhost” var “ansible_play_batch” type “” value: “[]”

2021-08-05 06:16:56,163+0800 ERROR ansible failed {‘status’: ‘FAILED’, ‘ansible_type’: ‘task’, ‘ansible_task’: u’Add glusterfs storage domain’, ‘ansible_result’: u’type: \nstr: {u\’exception\’: u\’Traceback (most recent call last):\\n File “/tmp/ansible_ovirt_storage_domain_payload_Vzc520/ansible_ovirt_storage_domain_payload.zip/ansible/modules/cloud/ovirt/ovirt_storage_domain.py”, line 792, in main\\n File “/tmp/ansible_ovirt_storage_domain_payload_Vzc520/ansible_ovirt_stora’, ‘task_duration’: 4, ‘ansible_host’: u’localhost’, ‘ansible_playbook’: u’/usr/share/ovirt-hosted-engine-setup/ansible/trigger_role.yml’}

2021-08-05 06:16:56,163+0800 DEBUG ansible on_any args kwargs ignore_errors:None

2021-08-05 06:16:56,167+0800 INFO ansible stats {

“ansible_playbook”: “/usr/share/ovirt-hosted-engine-setup/ansible/trigger_role.yml”,

“ansible_playbook_duration”: “00:56 Minutes”,

“ansible_result”: “type: \nstr: {u’localhost’: {‘ignored’: 0, ‘skipped’: 2, ‘ok’: 14, ‘failures’: 1, ‘unreachable’: 0, ‘rescued’: 0, ‘changed’: 0}}”,

“ansible_type”: “finish”,

“status”: “FAILED”

}

2021-08-05 06:16:56,168+0800 INFO SUMMARY:

Duration Task Name

——– ——–

[ < 1 sec ] Execute just a specific set of steps

[ 00:04 ] Force facts gathering

[ 00:03 ] Check local VM dir stat

[ 00:03 ] Obtain SSO token using username/password credentials

[ 00:03 ] Fetch host facts

[ 00:02 ] Fetch cluster ID

[ 00:03 ] Fetch cluster facts

[ 00:03 ] Fetch Datacenter facts

[ 00:02 ] Fetch Datacenter ID

[ 00:02 ] Fetch Datacenter name

[ FAILED ] Add glusterfs storage domain

2021-08-05 06:16:56,168+0800 DEBUG ansible on_any args kwargs

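Deploying inside VMware can be problematic. Look for the community article that covers deploying in VMware, though after oVirt version updates the issues you hit may differ.

Also, these two services won't start. Could that be related to this error?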

[root@node1 log]# systemctl status ovirt-ha-broker

● ovirt-ha-broker.service – oVirt Hosted Engine High Availability Communications Broker

Loaded: loaded (/usr/lib/systemd/system/ovirt-ha-broker.service; disabled; vendor preset: disabled)

Active: inactive (dead)

[root@node1 log]# systemctl status ovirt-ha-agent

● ovirt-ha-agent.service – oVirt Hosted Engine High Availability Monitoring Agent

Loaded: loaded (/usr/lib/systemd/system/ovirt-ha-agent.service; disabled; vendor preset: disabled)

Active: inactive (dead)

Deploying in an ESXi virtual machine, the error is:

[ INFO ] TASK [ovirt.hosted_engine_setup : Add IPv4 outbound route rules]

[ ERROR ] fatal: [localhost]: FAILED! => {“changed”: true, “cmd”: [“ip”, “rule”, “add”, “from”, “192.168.222.1/24”, “priority”, “101”, “table”, “main”], “delta”: “0:00:00.007739”, “end”: “2021-10-19 17:45:39.660506”, “msg”: “non-zero return code”, “rc”: 2, “start”: “2021-10-19 17:45:39.652767”, “stderr”: “RTNETLINK answers: File exists”, “stderr_lines”: [“RTNETLINK answers: File exists”], “stdout”: “”, “stdout_lines”: []}

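Did you solve it?

I want to test with three virtual machines, each with two disks: nvme0n1 as the system disk and sda as the data disk:

The deployment failed with the errors below, reporting that PV creation failed. What might cause this?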

TASK [gluster.infra/roles/backend_setup : Record for missing devices for phase 2] ***

task path: /etc/ansible/roles/gluster.infra/roles/backend_setup/tasks/vg_create.yml:49

skipping: [hci01.demo.com] => (item={‘cmd’: ‘ test -b /dev/sda && echo “1” || echo “0”; \n’, ‘stdout’: ‘1’, ‘stderr’: ”, ‘rc’: 0, ‘start’: ‘2022-01-28 01:34:12.072394’, ‘end’: ‘2022-01-28 01:34:12.075977’, ‘delta’: ‘0:00:00.003583’, ‘changed’: True, ‘invocation’: {‘module_args’: {‘_raw_params’: ‘ test -b /dev/sda && echo “1” || echo “0”; \n’, ‘_uses_shell’: True, ‘warn’: True, ‘stdin_add_newline’: True, ‘strip_empty_ends’: True, ‘argv’: None, ‘chdir’: None, ‘executable’: None, ‘creates’: None, ‘removes’: None, ‘stdin’: None}}, ‘stdout_lines’: [‘1’], ‘stderr_lines’: [], ‘failed’: False, ‘item’: {‘key’: ‘gluster_vg_sda’, ‘value’: [{‘vgname’: ‘gluster_vg_sda’, ‘pvname’: ‘/dev/sda’}]}, ‘ansible_loop_var’: ‘item’}) => {“ansible_loop_var”: “item”, “changed”: false, “item”: {“ansible_loop_var”: “item”, “changed”: true, “cmd”: ” test -b /dev/sda && echo \”1\” || echo \”0\”; \n”, “delta”: “0:00:00.003583”, “end”: “2022-01-28 01:34:12.075977”, “failed”: false, “invocation”: {“module_args”: {“_raw_params”: ” test -b /dev/sda && echo \”1\” || echo \”0\”; \n”, “_uses_shell”: true, “argv”: null, “chdir”: null, “creates”: null, “executable”: null, “removes”: null, “stdin”: null, “stdin_add_newline”: true, “strip_empty_ends”: true, “warn”: true}}, “item”: {“key”: “gluster_vg_sda”, “value”: [{“pvname”: “/dev/sda”, “vgname”: “gluster_vg_sda”}]}, “rc”: 0, “start”: “2022-01-28 01:34:12.072394”, “stderr”: “”, “stderr_lines”: [], “stdout”: “1”, “stdout_lines”: [“1”]}, “skip_reason”: “Conditional result was False”}

skipping: [hci02.demo.com] => (item={‘cmd’: ‘ test -b /dev/sda && echo “1” || echo “0”; \n’, ‘stdout’: ‘1’, ‘stderr’: ”, ‘rc’: 0, ‘start’: ‘2022-01-28 01:34:11.870627’, ‘end’: ‘2022-01-28 01:34:11.873715’, ‘delta’: ‘0:00:00.003088’, ‘changed’: True, ‘invocation’: {‘module_args’: {‘_raw_params’: ‘ test -b /dev/sda && echo “1” || echo “0”; \n’, ‘_uses_shell’: True, ‘warn’: True, ‘stdin_add_newline’: True, ‘strip_empty_ends’: True, ‘argv’: None, ‘chdir’: None, ‘executable’: None, ‘creates’: None, ‘removes’: None, ‘stdin’: None}}, ‘stdout_lines’: [‘1’], ‘stderr_lines’: [], ‘failed’: False, ‘item’: {‘key’: ‘gluster_vg_sda’, ‘value’: [{‘vgname’: ‘gluster_vg_sda’, ‘pvname’: ‘/dev/sda’}]}, ‘ansible_loop_var’: ‘item’}) => {“ansible_loop_var”: “item”, “changed”: false, “item”: {“ansible_loop_var”: “item”, “changed”: true, “cmd”: ” test -b /dev/sda && echo \”1\” || echo \”0\”; \n”, “delta”: “0:00:00.003088”, “end”: “2022-01-28 01:34:11.873715”, “failed”: false, “invocation”: {“module_args”: {“_raw_params”: ” test -b /dev/sda && echo \”1\” || echo \”0\”; \n”, “_uses_shell”: true, “argv”: null, “chdir”: null, “creates”: null, “executable”: null, “removes”: null, “stdin”: null, “stdin_add_newline”: true, “strip_empty_ends”: true, “warn”: true}}, “item”: {“key”: “gluster_vg_sda”, “value”: [{“pvname”: “/dev/sda”, “vgname”: “gluster_vg_sda”}]}, “rc”: 0, “start”: “2022-01-28 01:34:11.870627”, “stderr”: “”, “stderr_lines”: [], “stdout”: “1”, “stdout_lines”: [“1”]}, “skip_reason”: “Conditional result was False”}

skipping: [hci03.demo.com] => (item={‘cmd’: ‘ test -b /dev/sda && echo “1” || echo “0”; \n’, ‘stdout’: ‘1’, ‘stderr’: ”, ‘rc’: 0, ‘start’: ‘2022-01-28 01:34:11.833917’, ‘end’: ‘2022-01-28 01:34:11.836439’, ‘delta’: ‘0:00:00.002522’, ‘changed’: True, ‘invocation’: {‘module_args’: {‘_raw_params’: ‘ test -b /dev/sda && echo “1” || echo “0”; \n’, ‘_uses_shell’: True, ‘warn’: True, ‘stdin_add_newline’: True, ‘strip_empty_ends’: True, ‘argv’: None, ‘chdir’: None, ‘executable’: None, ‘creates’: None, ‘removes’: None, ‘stdin’: None}}, ‘stdout_lines’: [‘1’], ‘stderr_lines’: [], ‘failed’: False, ‘item’: {‘key’: ‘gluster_vg_sda’, ‘value’: [{‘vgname’: ‘gluster_vg_sda’, ‘pvname’: ‘/dev/sda’}]}, ‘ansible_loop_var’: ‘item’}) => {“ansible_loop_var”: “item”, “changed”: false, “item”: {“ansible_loop_var”: “item”, “changed”: true, “cmd”: ” test -b /dev/sda && echo \”1\” || echo \”0\”; \n”, “delta”: “0:00:00.002522”, “end”: “2022-01-28 01:34:11.836439”, “failed”: false, “invocation”: {“module_args”: {“_raw_params”: ” test -b /dev/sda && echo \”1\” || echo \”0\”; \n”, “_uses_shell”: true, “argv”: null, “chdir”: null, “creates”: null, “executable”: null, “removes”: null, “stdin”: null, “stdin_add_newline”: true, “strip_empty_ends”: true, “warn”: true}}, “item”: {“key”: “gluster_vg_sda”, “value”: [{“pvname”: “/dev/sda”, “vgname”: “gluster_vg_sda”}]}, “rc”: 0, “start”: “2022-01-28 01:34:11.833917”, “stderr”: “”, “stderr_lines”: [], “stdout”: “1”, “stdout_lines”: [“1”]}, “skip_reason”: “Conditional result was False”}

TASK [gluster.infra/roles/backend_setup : Create volume groups] ****************

task path: /etc/ansible/roles/gluster.infra/roles/backend_setup/tasks/vg_create.yml:59

failed: [hci01.demo.com] (item={‘key’: ‘gluster_vg_sda’, ‘value’: [{‘vgname’: ‘gluster_vg_sda’, ‘pvname’: ‘/dev/sda’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Cannot use /dev/sda: device is rejected by filter config\n”, “item”: {“key”: “gluster_vg_sda”, “value”: [{“pvname”: “/dev/sda”, “vgname”: “gluster_vg_sda”}]}, “msg”: “Creating physical volume ‘/dev/sda’ failed”, “rc”: 5}

failed: [hci02.demo.com] (item={‘key’: ‘gluster_vg_sda’, ‘value’: [{‘vgname’: ‘gluster_vg_sda’, ‘pvname’: ‘/dev/sda’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Cannot use /dev/sda: device is rejected by filter config\n”, “item”: {“key”: “gluster_vg_sda”, “value”: [{“pvname”: “/dev/sda”, “vgname”: “gluster_vg_sda”}]}, “msg”: “Creating physical volume ‘/dev/sda’ failed”, “rc”: 5}

failed: [hci03.demo.com] (item={‘key’: ‘gluster_vg_sda’, ‘value’: [{‘vgname’: ‘gluster_vg_sda’, ‘pvname’: ‘/dev/sda’}]}) => {“ansible_loop_var”: “item”, “changed”: false, “err”: ” Cannot use /dev/sda: device is rejected by filter config\n”, “item”: {“key”: “gluster_vg_sda”, “value”: [{“pvname”: “/dev/sda”, “vgname”: “gluster_vg_sda”}]}, “msg”: “Creating physical volume ‘/dev/sda’ failed”, “rc”: 5}

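PV creation failures are almost always caused by the disk being in use. Common cases: (1) the disk is managed by multipath; (2) the disk was previously used as a PV in another LVM setup; (3) the disk is excluded by the LVM filter (common on CentOS 8). You can run pvcreate /dev/sda by hand to see the error message, then run pvremove /dev/sda afterwards.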
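As a small aid for reading these failures, the sketch below maps the `err` strings seen in this thread to the checks listed above. The function name `diagnose_pv_error` and the wording of the hints are illustrative; it inspects only the message text and touches nothing on the system.

```shell
#!/bin/sh
# Map an LVM error message (the "err" field in the Ansible output) to a hint.
# The two filter messages are the ones quoted in this thread.
diagnose_pv_error() {
    case "$1" in
        *"excluded by a filter"*|*"rejected by filter config"*)
            echo "filter: check multipath, leftover partitions or signatures, and the LVM filter" ;;
        *)
            echo "unknown: run pvcreate by hand and read the full message" ;;
    esac
}

diagnose_pv_error "Cannot use /dev/sda: device is rejected by filter config"

# Follow-up checks to run by hand on the node (read-only):
#   multipath -ll                                              # is the disk claimed by multipath?
#   lsblk /dev/sda                                             # any partitions or LVM on top?
#   grep -E '^[[:space:]]*(global_)?filter' /etc/lvm/lvm.conf  # active LVM filter lines
```

Both filter messages in this thread land on the same hint, because LVM reports multipath claims, stale signatures and explicit filter rules in much the same way.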

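With this oVirt version, a single VM disk supports at most 8T. How can I go larger? I'm building a file-sharing server and need large disks. Is this improved in newer versions?

I ran into the following problem during deployment: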

[ INFO ] TASK [ovirt.ovirt.hosted_engine_setup : Find the local appliance image]

[ INFO ] ok: [localhost -> localhost]

[ INFO ] TASK [ovirt.ovirt.hosted_engine_setup : Set local_vm_disk_path]

[ INFO ] ok: [localhost -> localhost]

[ INFO ] TASK [ovirt.ovirt.hosted_engine_setup : Give the vm time to flush dirty buffers]

[ INFO ] ok: [localhost -> localhost]

[ INFO ] TASK [ovirt.ovirt.hosted_engine_setup : Copy engine logs]

[ INFO ] changed: [localhost]

[ INFO ] TASK [ovirt.ovirt.hosted_engine_setup : Notify the user about a failure]

[ ERROR ] fatal: [localhost]: FAILED! => {“changed”: false, “msg”: “There was a failure deploying the engine on the local engine VM. The system may not be provisioned according to the playbook results: please check the logs for the issue, fix accordingly or re-deploy from scratch.\n”}